Start your day with intelligence. Get The OODA Daily Pulse.

Several new AI-enabled image generation applications are available for widespread use. The best known are OpenAI’s DALL-E, Stable Diffusion, and Midjourney. These capabilities are now being leveraged in other applications, and many tech firms are beginning to embed these and similar capabilities into their offerings. As a result, individuals and business users need only enter text prompts to generate images. Prompts can be simple or complex, and the results can be so well done that many consider these tools to be job disruptors.

Each of these major systems can create images so realistic and vivid that you would think they were taken with a high-resolution camera. They can do that for any scene you can imagine, from portraits to bizarre sci-fi scenarios. Each is very powerful, and each is continually improving, which means a great capitalist competition is underway here. It is worth having a foundational awareness of all three so you can track improvements in the field while using these tools for your own needs.

Here are some things to know about each of the major AI-enabled image machines:

Midjourney: Users create art by entering prompts in Discord. This takes a little getting used to because new commands must be learned. However, by using Discord as the interface, the company saved significant time and energy by not having to develop its own interface. Midjourney art has appeared on magazine covers and has won numerous art contests and awards. Midjourney does apply censorship. For example, it has blocked generation of images of Xi Jinping. The CEO of Midjourney said “the ability for people in China to use this tech is more important than your ability to generate satire.”

Stable Diffusion: Funded, shaped, and supported by a startup called Stability AI, but its code and model weights are available for all to see. It was trained on images and captions from a large, publicly available dataset of web-scraped data. Stability AI has acknowledged a potential for algorithmic bias, since the model was trained mostly on images with English descriptions and does not have enough data on other cultures. To mitigate these biases, end users can apply additional fine-tuning. There are many ways to run Stable Diffusion. Power users will want to install it on a powerful home computer or a web server, but many online web apps provide access as well (for example, https://stablediffusionweb.com). DreamStudio, from Stability AI, provides a web interface with more power and controls but requires an account and API access.

DALL-E: From OpenAI. Its source code is not available (it is not open, which is strange for a company called OpenAI). It can be used via the OpenAI website or through applications that use the API. Microsoft has integrated DALL-E into its creator tools. The training data was filtered to remove violent and sexual imagery, but results have been found to be biased toward generating more men than women. The system at times invisibly inserts terms into prompts to shift results toward being more racially balanced and equitable. To limit its use for deepfakes, the system rejects uploaded images of faces as inputs for generating results.

Since each of these models uses text prompts to build images, I concocted a test to evaluate all three. I am going to ask each to respond to two kinds of prompts: one extremely ambiguous and open to interpretation, the other more bounded but fanciful. For the open prompt, I’ll ask each to give me an image responding to this statement: "New technology brings peace and health and happiness for all humanity." For the more bounded prompt, I’ll describe this scene: "Photo realistic, high atop a towering building, famous scientist Albert Einstein faces the camera in stoic silence, gazing out across the futuristic scifi city. He is proud in his knowledge that his research helped build this futuristic city. The wind whips through his gray hair. hyper realistic, super detailed. shot on afga vista 400, natural lighting."

DALL-E results (open prompt):

Stable Diffusion results (open prompt):

Midjourney results (open prompt):

For each of these, you can select your favorite and have it developed further. Here is my favorite of the bunch:

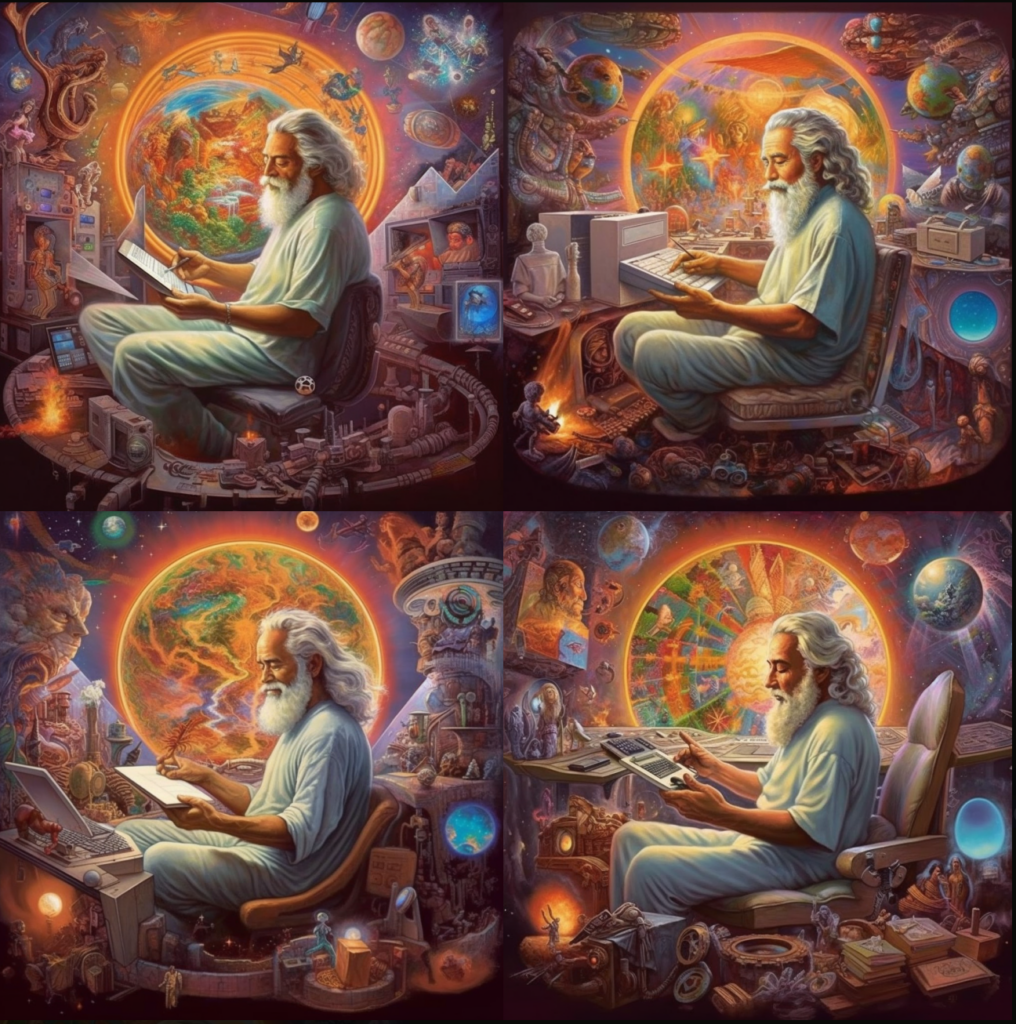

Why did I like the one from Midjourney? The others were just meh; I guess they did not handle the ambiguity in the prompt well. Midjourney at least made an effort to grapple with the extremely vague concepts I gave it.

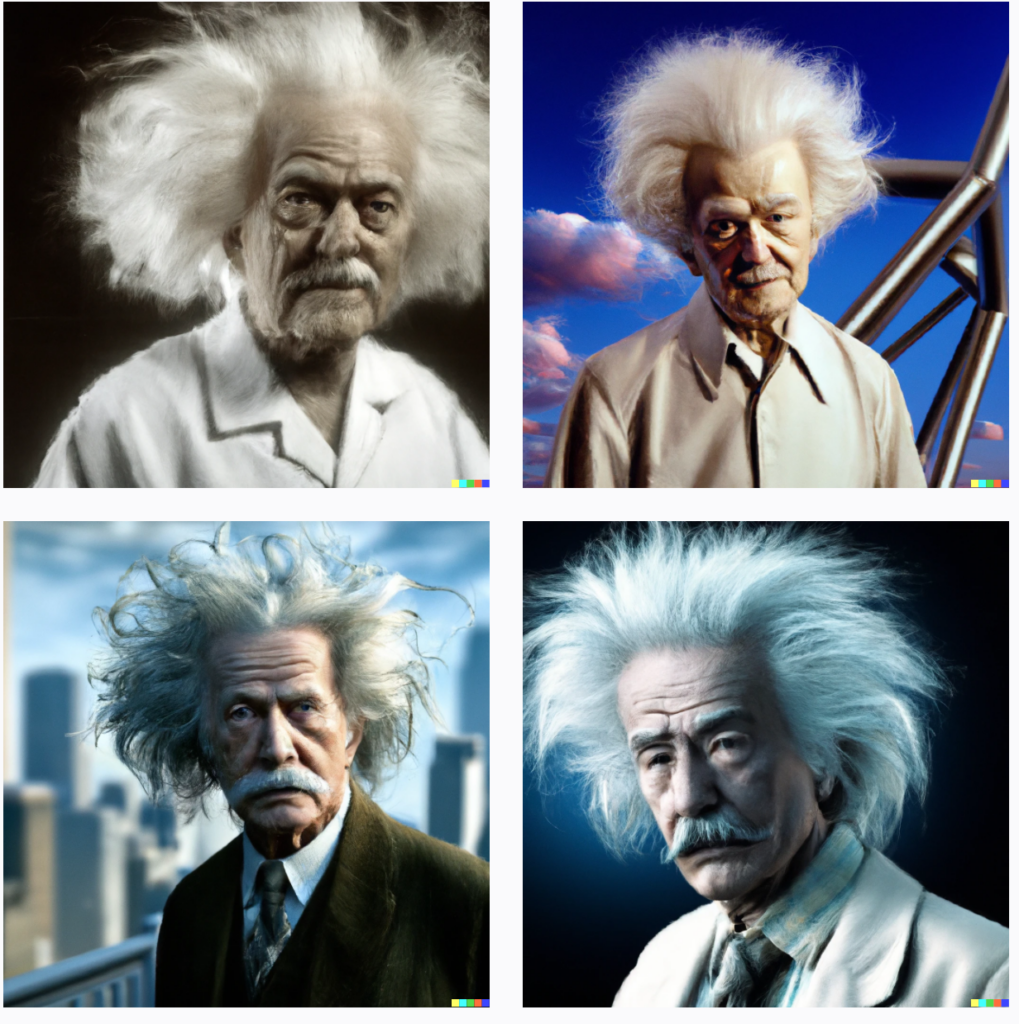

DALL-E results (Einstein prompt):

Stable Diffusion results (Einstein prompt):

Midjourney results (Einstein prompt):

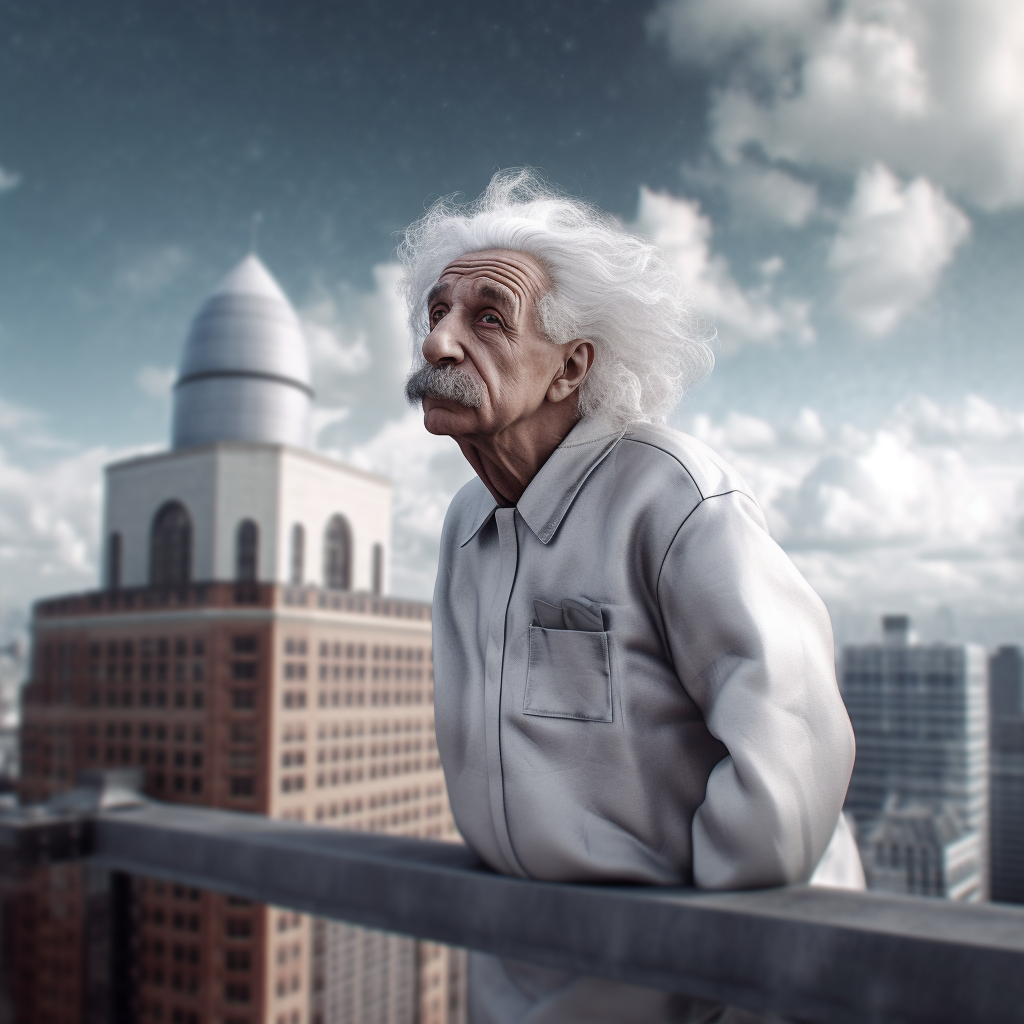

For this prompt, too, I liked the Midjourney results best. But why did it give me an option that looks like a bobblehead doll? Here is a final workup of the first frame.

Pretty amazing. That image did not exist before. Is it art? If you enjoy it, it is. It did not quite meet my goal of being photorealistic, but it is still pretty impressive.

Here is a final version of the third image in the series:

This is absolutely amazing. Still, it is not quite my idea of a futuristic city. I imagine I could have kept refining the prompt to be more specific about what that city should look like.

The cost of producing these is so low that it is almost nothing (a pro account at Midjourney is currently $8.00 per month).

Here is a bonus picture: an imaginary photo taken by a time traveler who went back to Tun Tavern in Philadelphia to snap a picture of the first recruits to join the US Marines in 1775.

Conclusions/Recommendations Regarding AI Image Generation:

One final image to leave you with. This one was created in about 30 seconds. You can tell it is fake, of course, but it does show that these image generation tools are becoming more and more capable. Imagine what they will do as they keep improving. This one shows Putin walking with someone who is not his wife, something that might anger his wife. And he is in a location that would anger me! These new AI tools give individual users with no Photoshop skills the ability to rapidly create images that look real but have nothing at all to do with reality. That is scary, and it is a key reason to keep tracking how these tools develop: