Start your day with intelligence. Get The OODA Daily Pulse.

Following is a survey of recently announced GPT platforms of note, culled from the exponentially growing stream of generative AI headlines over the last few months. It is a curated selection of *GPT and *AI platforms for the OODA Loop readership, across a wide swath of industry sectors. From JPMorgan’s IndexGPT trademark application to CrowdStrike’s Charlotte AI, survey the fat of the AI land here.

Source code: https://github.com/Significant-Gravitas/Auto-GPT

About: An experimental open-source attempt to make GPT-4 fully autonomous.

#AutoGPT is the new disruptive kid on the block- It can apply #ChatGPT's reasoning to broader, more intricate issues requiring planning & multiple steps.

Still early but very impressive with many health and biomedicine applications.

Just tried #AgentGPT and asked it to… pic.twitter.com/ywFhtjxjYD

— Daniel Kraft, MD (@daniel_kraft) April 12, 2023

What is Auto-GPT and why does it matter?: Auto-GPT, which you might’ve seen blowing up on social media recently, is an open-source app created by game developer Toran Bruce Richards that uses OpenAI’s text-generating models, mainly GPT-3.5 and GPT-4, to act “autonomously.” In essence, Auto-GPT uses the versatility of OpenAI’s latest AI models to interact with software and services online, allowing it to “autonomously” perform multistep tasks. But as we are learning with large language models, this capability seems to be as wide as an ocean but as deep as a puddle.

There’s no magic in that autonomy. Auto-GPT simply handles follow-ups to an initial prompt of OpenAI’s models, both asking and answering them until a task is complete. Auto-GPT, basically, is GPT-3.5 and GPT-4 paired with a companion bot that instructs GPT-3.5 and GPT-4 on what to do. A user tells Auto-GPT what their goal is and the bot, in turn, uses GPT-3.5 and GPT-4 and several programs to carry out every step needed to achieve whatever goal they’ve set.

What makes Auto-GPT reasonably capable is its ability to interact with apps, software, and services both online and local, like web browsers and word processors. For example, given a prompt like “help me grow my flower business,” Auto-GPT can develop a somewhat plausible advertising strategy and build a basic website. (1)
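The follow-up loop described above can be sketched in a few lines. This is a minimal illustration, not Auto-GPT’s actual code: the `plan` callable is a hypothetical stand-in for the GPT-3.5/GPT-4 call, and the tool names (`search`, `write_file`, `finish`) are invented for the example. What it shows is the control flow: the model proposes an action, a tool executes it, and the result is fed back until the model declares the goal complete or a step limit is reached.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    """One action chosen by the planner: a tool name and its argument."""
    tool: str
    arg: str


@dataclass
class Agent:
    # `plan` stands in for a GPT-3.5/GPT-4 call that reads the goal and the
    # results so far and proposes the next Step (hypothetical interface).
    plan: Callable[[str, list[str]], Step]
    tools: dict[str, Callable[[str], str]]
    max_steps: int = 10

    def run(self, goal: str) -> str:
        history: list[str] = []
        for _ in range(self.max_steps):
            step = self.plan(goal, history)
            if step.tool == "finish":
                return step.arg
            tool = self.tools.get(step.tool)
            result = tool(step.arg) if tool else f"unknown tool: {step.tool}"
            # Record the observation so the next planning call can react to it.
            history.append(f"{step.tool}({step.arg}) -> {result}")
        return "stopped: step limit reached"


def scripted_plan(goal: str, history: list[str]) -> Step:
    # Stand-in for the model: search, write a page, then declare the goal done.
    if not history:
        return Step("search", "flower shop marketing ideas")
    if len(history) == 1:
        return Step("write_file", "index.html")
    return Step("finish", f"done after {len(history)} steps")


agent = Agent(
    plan=scripted_plan,
    tools={
        "search": lambda q: f"3 results for '{q}'",
        "write_file": lambda name: f"wrote {name}",
    },
)
print(agent.run("help me grow my flower business"))  # done after 2 steps
```

The step limit matters in practice: without it, a model that never emits `finish` would keep issuing (and paying for) follow-up calls indefinitely.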

For how to create your own Auto-GPT AI agent, go to: https://www.tomshardware.com/how-to/auto-gpt-ai-agent

Source code: N/A (trademark application only)

About: JPMorgan Chase filed a trademark application for IndexGPT, a chatbot designed to answer questions about finance. The bot will reportedly be used for advertising and marketing services, an index of securities values, and online financial information and investment advice. (2)

JPMorgan is actively working on #ChatGPT rival, applies for "IndexGPT" trademark. https://t.co/VUo9eZ1vTP

— Cointelegraph (@Cointelegraph) May 27, 2023

Image Source: Cointelegraph – JPMorgan’s trademark application for IndexGPT. Source: USPTO

JP Morgan Files Patent for ChatGPT Finance Clone, IndexGPT: Financial giant JPMorgan Chase filed a trademark application for a finance-themed chatbot called IndexGPT earlier this month. According to the application filed on May 11 with the United States Patent and Trademark Office, the chatbot would be used for advertising and marketing services, an index of securities values, and online financial information and investment advice. “AI and the raw material that feeds it, data, will be critical to our company’s future success,” JPMorgan Chase CEO Jamie Dimon said in a letter to shareholders in April. “The importance of implementing new technologies simply cannot be overstated.” In a February survey by JP Morgan, more than half of the institutional traders surveyed said that artificial intelligence and machine learning would be the most influential technology in shaping the future of trading over the next three years.

Source code: https://github.com/imartinez/privateGPT

About: Ask questions of your documents using the power of LLMs, 100% privately and without an internet connection. You can ingest documents and query them offline; no data leaves your execution environment at any point, so there are no data leaks.

privacyGPT https://t.co/w8XUa6d7nQ No more feeding openai's RLHF out of own interest. (you might feel it's not a big thing, but in the near future it will.) #chatGPT #privacy #PrivacyMatters #privacyGPT

— Felix Peña (@kaisen2350) May 11, 2023

What is a private ChatGPT that interacts with your local documents?: As much as ChatGPT is convenient, it has its tradeoffs. The fact that it requires you to send your data over the internet can be a concern when it comes to privacy, especially if you’re using confidential documents. Additionally, it requires a constant internet connection, which can be an issue in areas with poor connectivity. Fortunately, there is an alternative. You can run your own local large language model (LLM), which puts you in control of your data and privacy. In this article, we will explore how to create a private ChatGPT that interacts with your local documents, giving you a powerful tool for answering questions and generating text without having to rely on OpenAI’s servers. We will also look at PrivateGPT, a project that simplifies the process of creating a private LLM.

By using a local language model and vector database, you can maintain control over your data and ensure privacy while still having access to powerful language processing capabilities. The process may require some technical expertise, but there are many resources available online to help you get started. One solution is PrivateGPT, a project hosted on GitHub that brings together all the components mentioned above in an easy-to-install package. PrivateGPT includes a language model, an embedding model, a database for document embeddings, and a command-line interface. It supports several types of documents including plain text (.txt), comma-separated values (.csv), Word (.docx and .doc), PDF, Markdown (.md), HTML, Epub, and email files (.eml and .msg). (4)
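The pipeline described above (embed documents, store the vectors locally, retrieve the closest chunks, hand them to a local LLM) can be sketched with the Python standard library alone. This is a hypothetical illustration, not PrivateGPT’s code: `embed` is a toy bag-of-words counter standing in for a real embedding model, `LocalIndex` stands in for the vector database, and the final prompt would go to a locally hosted model that is not shown.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": a bag-of-words term-count vector. A real deployment
    # would call a local embedding model here (assumption for illustration).
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class LocalIndex:
    """In-memory stand-in for the vector database; nothing leaves the process."""

    def __init__(self) -> None:
        self.chunks: list[tuple[str, Counter]] = []

    def ingest(self, document: str, chunk_size: int = 40) -> None:
        # Split into fixed-size word chunks and store each with its vector.
        words = document.split()
        for i in range(0, len(words), chunk_size):
            chunk = " ".join(words[i:i + chunk_size])
            self.chunks.append((chunk, embed(chunk)))

    def search(self, question: str, k: int = 2) -> list[str]:
        # Rank stored chunks by similarity to the question; keep the top k.
        q = embed(question)
        ranked = sorted(self.chunks, key=lambda c: cosine(q, c[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question: str, context: list[str]) -> str:
    # This prompt would be sent to a locally hosted LLM (not shown).
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


index = LocalIndex()
index.ingest("The quarterly report shows revenue grew 12 percent.")
index.ingest("The office is closed on public holidays.")
hits = index.search("How much did revenue grow?", k=1)
print(build_prompt("How much did revenue grow?", hits))
```

In this sketch every step runs in-process, so nothing needs to leave the machine; that is the property PrivateGPT is built around.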

For how to create a private ChatGPT that interacts with your local documents, go to: https://bdtechtalks.com/2023/06/01/create-privategpt-local-llm/

Available at: https://www.notion.so/product/ai

About: Notion AI is a new artificial intelligence feature from the productivity app Notion. Notion AI is designed to help you be more productive by understanding your work habits and providing suggestions on how to improve them.

Notion AI is now available to everyone.

No waitlist, no “limited preview.”

Get started: https://t.co/qKmTw6ieJP pic.twitter.com/JcQra1YbZf

— Notion (@NotionHQ) February 22, 2023

While the AI integrated into the app can write articles from whole cloth (I asked it to write a blog post about the Notion AI announcement, and it spat out 385 words, only some of which were accurate), the company is pitching it more as a “thought partner.” In its announcement post, the company says one of the features alpha testers used the most was asking it to improve text they had written. For example, you can highlight text and ask Notion to rewrite it in a different tone, use simpler language, or simply pad out or cut down a sentence. (5)

Available at: Charlotte AI is currently available in private customer preview.

About: “A new generative AI security analyst that uses the world’s highest-fidelity security data and is continuously improved by a tight feedback loop with CrowdStrike’s industry-leading threat hunters, managed detection and response operators, and incident response experts. Charlotte AI [is the] first offering built using our Charlotte AI engine and will help users of all skill levels improve their ability to stop breaches while reducing security operations complexity. Customers can ask questions in plain English and dozens of other languages to receive intuitive answers from the CrowdStrike Falcon platform.” (6)

CrowdStrike has pioneered the use of artificial intelligence since we first introduced AI-powered protection to replace signature-based antivirus over 10 years ago, and we’ve continued to deeply integrate it across our platform.

🤝 Meet Charlotte AI. https://t.co/mWKH0tcT7e

— CrowdStrike (@CrowdStrike) May 30, 2023

Meet Charlotte, CrowdStrike’s New Generative AI Assistant – Charlotte AI is the latest security-based generative AI assistant to hit the market: CrowdStrike is jumping on the generative artificial intelligence (AI) bandwagon, as the company tests out its own generative AI security assistant, known as Charlotte AI. Charlotte AI is designed to answer such questions as whether a system is affected by a specific vulnerability, and to provide recommended action items, the company said. It can also be prompted to find malicious activity, such as lateral movement across Windows machines. The goal is to provide less experienced IT and security professionals with the information they need about their environments and security posture in order to make better decisions faster.

Example questions include:

In recent months, several companies — Microsoft and Google included — have incorporated generative AI assistants into their security platforms. These assistants offer security analysts a way to query large amounts of security data using natural language and make correlations among different data sources. In this sense, Charlotte AI provides a natural language interface to the Falcon platform so that security analysts, “regardless of experience level or organization size, [can] be a power user of the Falcon platform,” the company said. (7)

Source code: N/A; the various LLMs used for experimentation and research can be found in the resources section of this white paper.

About: FrugalGPT is a framework, proposed by researchers at Stanford, for reducing the cost of using commercial LLM APIs such as GPT-4, ChatGPT, and J1-Jumbo while maintaining or improving performance. Rather than shrinking a model, it implements an LLM cascade: each query goes to cheaper models first and is escalated to more expensive ones only when needed, with FrugalGPT learning which combinations of LLMs to use for different queries. The aim is to strike a balance between performance and cost.

FrugalGPT: How to Use LLMs Cheaply

-LLM cascade learns which combinations of LLMs to use for different queries to reduce cost / improve accuracy

-Matches perf of best individual LLM (GPT-4) w/ 98% cost reduction

-Or improves accuracy by 4% w/ same cost https://t.co/z9W6E9Ke8o pic.twitter.com/mjYylbNslj

— John Nay (@johnjnay) May 10, 2023

FrugalGPT: How to Use Large Language Models While Reducing Cost and Improving Performance: There is a rapidly growing number of large language models (LLMs) that users can query for a fee. We review the cost associated with querying popular LLM APIs, e.g. GPT-4, ChatGPT, J1-Jumbo, and find that these models have heterogeneous pricing structures, with fees that can differ by two orders of magnitude. In particular, using LLMs on large collections of queries and text can be expensive. Motivated by this, we outline and discuss three types of strategies that users can exploit to reduce the inference cost associated with using LLMs: 1) prompt adaptation, 2) LLM approximation, and 3) LLM cascade. As an example, we propose FrugalGPT, a simple yet flexible instantiation of LLM cascade which learns which combinations of LLMs to use for different queries in order to reduce cost and improve accuracy. Our experiments show that FrugalGPT can match the performance of the best individual LLM (e.g. GPT-4) with up to 98% cost reduction or improve the accuracy over GPT-4 by 4% with the same cost. The ideas and findings presented here lay a foundation for using LLMs sustainably and efficiently. (8)
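The cascade idea from the abstract can be sketched as follows. Assumptions: the three tiers, their prices, and `toy_score` are invented for illustration. In FrugalGPT the choice of models and the decision of when an answer is good enough are learned from data rather than hard-coded, and each tier would be a real API request.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tier:
    name: str
    cost_per_query: float          # illustrative prices, not real API rates
    answer: Callable[[str], str]   # stand-in for an API call to this model


def cascade(query: str, tiers: list[Tier],
            score: Callable[[str, str], float],
            threshold: float) -> tuple[str, str, float]:
    """Query models from cheapest to most expensive, stopping at the first
    answer whose score clears the threshold.

    Returns (answer, name of the model that produced it, total cost spent).
    If no answer clears the threshold, the last (strongest) model's answer
    is returned.
    """
    answer, name, spent = "", "", 0.0
    for tier in tiers:
        answer, name = tier.answer(query), tier.name
        spent += tier.cost_per_query
        if score(query, answer) >= threshold:
            break  # good enough: skip the more expensive models
    return answer, name, spent


tiers = [
    Tier("small-model", 0.25, lambda q: "unsure"),
    Tier("mid-model", 1.0, lambda q: "Paris"),
    Tier("large-model", 10.0, lambda q: "Paris"),
]


def toy_score(query: str, answer: str) -> float:
    # Hand-written reliability score; FrugalGPT learns this decision instead.
    return 0.0 if answer == "unsure" else 0.9


print(cascade("What is the capital of France?", tiers, toy_score, threshold=0.8))
# ('Paris', 'mid-model', 1.25)
```

Here the cheapest model’s hedged answer is rejected, the mid-tier answer is accepted, and the most expensive model is never called, which is where the savings come from.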

Available at: Slack describes many use cases and developer tools at https://slack.com/blog/news/introducing-slack-gpt

About: Slack is integrating a “conversational AI experience” that lets users automate and customize work processes, the company said Thursday. Slack GPT will include native AI capabilities aimed at specific work functions, such as sales, marketing, and IT. Other features, including the ChatGPT app for Slack, are available in beta or will launch this summer.

Salesforce today announced SlackGPT, a new generative AI-powered experience planned for the Slack app, to be released soon. https://t.co/Oep5K8yIGu

— VentureBeat (@VentureBeat) May 4, 2023

Slack GPT arrives to automate core communication workflows: The new capabilities will let customers build no-code workflows that embed AI actions with simple prompts, according to Ali Rayl, SVP of product at Slack. The list of providers integrating AI functions into existing products is long and expanding. Slack joins companies such as Zoom, Atlassian, Stack Overflow and the three largest hyperscalers. Microsoft and Google also equipped their productivity suites with generative AI capabilities. In March, Slack introduced a ChatGPT app, available to users in beta. But today’s announcement brings generative AI closer to how users interact with Slack, according to Rayl.

“What we’re talking about going forward is the native integrations with different Slack product surfaces,” Rayl said. This includes channel summaries, huddle transcripts, a text creation tool called Canvas and no-code creation of workflows. Slack GPT will let users integrate a language model of choice, such as OpenAI’s GPT, Anthropic’s Claude “or, in the future, Salesforce’s proprietary LLM,” Rayl said. As part of the Slack GPT experience, users will have access to Einstein GPT app for Slack, a conversational interface to connect the collaboration platform with Salesforce’s Customer 360 system.

The new features will allow users to customize the generative AI tool kit for specific functions. Workers in customer service will get access to AI-generated solutions and responses, for example. They will also be able to auto-generate case summaries to share in channels and canvases. Developers and IT workers using the features could automatically scan channel activities and summarize root cause analysis to improve incident management.

Despite broad interest surrounding generative AI, questions remain related to data privacy, as the success of the systems hinges on the data they ingest. Slack said all apps in its directory undergo a thorough review before public distribution. “Slack GPT is powered by Slack’s secure platform, which offers a variety of settings and controls so that our customers can make right decisions for their own security and compliance needs,” the company said in an emailed statement. “This includes allowing admins to implement an app approval process so no app can be installed without their permission.” (9)
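Letting customers plug in “a language model of choice” implies a provider-agnostic interface between product features and models. The sketch below shows that design in general terms; it is not Slack’s API. `LanguageModel`, `EchoModel`, and `summarize_channel` are hypothetical names, and `EchoModel` is an offline stand-in for a real provider so the example runs without credentials.

```python
from typing import Protocol


class LanguageModel(Protocol):
    """Anything that can complete a prompt: OpenAI, Anthropic, or in-house."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Hypothetical offline stand-in so the sketch runs without credentials."""

    def complete(self, prompt: str) -> str:
        return f"[model received {len(prompt.splitlines())} prompt lines]"


def summarize_channel(model: LanguageModel, channel: str,
                      messages: list[tuple[str, str]]) -> str:
    """Build a channel-summary prompt and send it to whichever model is configured."""
    transcript = "\n".join(f"{user}: {text}" for user, text in messages)
    prompt = f"Summarize the key decisions in #{channel}:\n{transcript}"
    return model.complete(prompt)


messages = [("ana", "DB latency spiked after the deploy"),
            ("raj", "Rolled back; latency is normal again")]
print(summarize_channel(EchoModel(), "incidents", messages))
# [model received 3 prompt lines]
```

Swapping providers then means passing a different object with a `complete` method; the channel-summary feature itself does not change.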

Available at: For more information, visit Bloomberg.com/company or request a demo.

About: “Bloomberg…released a research paper detailing the development of BloombergGPT™, a new large-scale generative artificial intelligence (AI) model. This large language model (LLM) has been specifically trained on a wide range of financial data to support a diverse set of natural language processing (NLP) tasks within the financial industry.” (10)

ChatGPT is impressive but industry needs more specialized tools. Enter #BloombergGPT, bringing AI to Wall Street and the world of finance. @JHUCompSci's @mdredze talks about his work on the project and the future of domain-specific language models: https://t.co/VhsBxaHdAF

— Johns Hopkins Engineering (@HopkinsEngineer) June 1, 2023

Introducing BloombergGPT, Bloomberg’s 50-billion parameter large language model, purpose-built from scratch for finance: For more than a decade, Bloomberg has been a trailblazer in its application of AI, Machine Learning, and NLP in finance. Today, Bloomberg supports a very large and diverse set of NLP tasks that will benefit from a new finance-aware language model. Bloomberg researchers pioneered a mixed approach that combines both finance data with general-purpose datasets to train a model that achieves best-in-class results on financial benchmarks, while also maintaining competitive performance on general-purpose LLM benchmarks.

To achieve this milestone, Bloomberg’s ML Product and Research group collaborated with the firm’s AI Engineering team to construct one of the largest domain-specific datasets yet, drawing on the company’s existing data creation, collection, and curation resources. As a financial data company, Bloomberg’s data analysts have collected and maintained financial language documents over the span of forty years. The team pulled from this extensive archive of financial data to create a comprehensive 363 billion token dataset consisting of English financial documents.

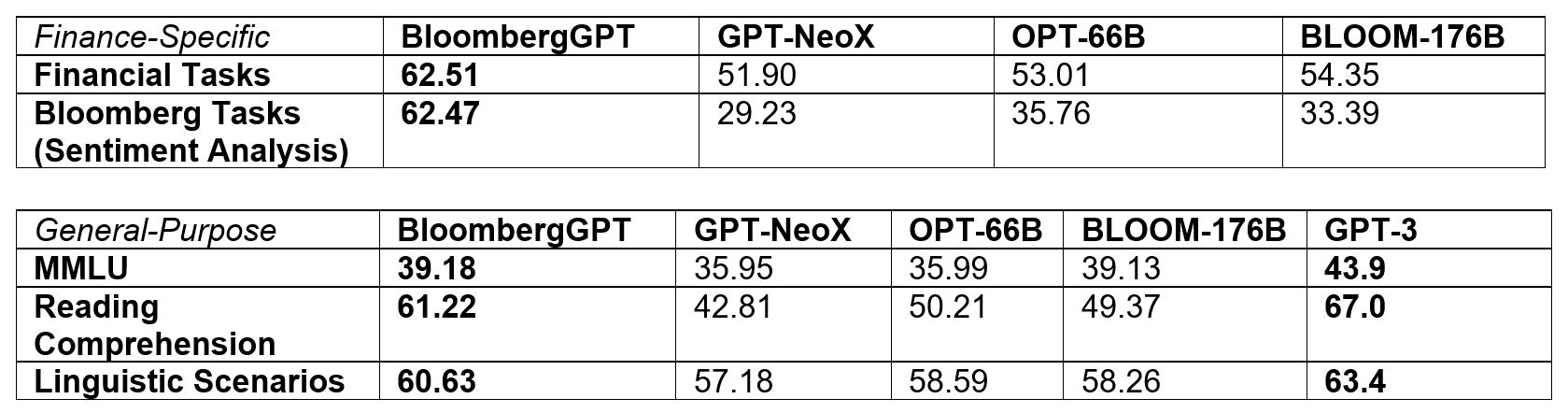

This data was augmented with a 345 billion token public dataset to create a large training corpus with over 700 billion tokens. Using a portion of this training corpus, the team trained a 50-billion parameter decoder-only causal language model. The resulting model was validated on existing finance-specific NLP benchmarks, a suite of Bloomberg internal benchmarks, and broad categories of general-purpose NLP tasks from popular benchmarks (e.g., BIG-bench Hard, Knowledge Assessments, Reading Comprehension, and Linguistic Tasks). Notably, the BloombergGPT model outperforms existing open models of a similar size on financial tasks by large margins, while still performing on par or better on general NLP benchmarks.

Table 1. How BloombergGPT performs across two broad categories of NLP tasks: finance-specific and general-purpose. (10)
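The corpus arithmetic above is easy to check. This short calculation uses only the figures quoted in the text (363 billion financial tokens and 345 billion public tokens). Note that these are shares of the assembled corpus; since the model was trained on a portion of it, the mix actually seen in training may differ.

```python
def mixture_weights(token_counts: dict[str, float]) -> dict[str, float]:
    """Return each source's share of the combined corpus."""
    total = sum(token_counts.values())
    return {name: count / total for name, count in token_counts.items()}


# Token counts as reported for BloombergGPT's training corpus.
corpus = {"financial (Bloomberg)": 363e9, "public": 345e9}

print(sum(corpus.values()) / 1e9)  # 708.0 billion tokens, i.e. "over 700 billion"
for name, share in mixture_weights(corpus).items():
    print(f"{name}: {share:.1%}")
# financial (Bloomberg): 51.3%
# public: 48.7%
```

The near-even split matches what Mark Dredze describes below as combining financial text with “an equally large dataset of general-purpose text.”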

BloombergGPT: A Large Language Model for Finance: The use of NLP in the realm of financial technology is broad and complex, with applications ranging from sentiment analysis and named entity recognition to question answering. Large Language Models (LLMs) have been shown to be effective on a variety of tasks; however, no LLM specialized for the financial domain has been reported in literature. In this work, we present BloombergGPT, a 50 billion parameter language model that is trained on a wide range of financial data. We construct a 363 billion token dataset based on Bloomberg’s extensive data sources, perhaps the largest domain-specific dataset yet, augmented with 345 billion tokens from general-purpose datasets. We validate BloombergGPT on standard LLM benchmarks, open financial benchmarks, and a suite of internal benchmarks that most accurately reflect our intended usage. Our mixed dataset training leads to a model that outperforms existing models on financial tasks by significant margins without sacrificing performance on general LLM benchmarks. Additionally, we explain our modeling choices, training process, and evaluation methodology. We release Training Chronicles (Appendix C) detailing our experience in training BloombergGPT. (11)

This ChatGPT-inspired large language model speaks fluent finance: Mark Dredze, an associate professor of computer science at Johns Hopkins University’s Whiting School of Engineering and visiting researcher at Bloomberg, was part of the team that created [BloombergGPT]. Dredze is also the inaugural director of research (Foundations of AI) in the new AI-X Foundry at Johns Hopkins. The Hub spoke with Dredze about BloombergGPT and its broader implications for AI research at Johns Hopkins.

What were the goals of the BloombergGPT project?

Many people have seen ChatGPT and other large language models, which are impressive new artificial intelligence technologies with tremendous capabilities for processing language and responding to people’s requests. The potential for these models to transform society is clear. To date, most models are focused on general-purpose use cases. However, we also need domain-specific models that understand the complexities and nuances of a particular domain. While ChatGPT is impressive for many uses, we need specialized models for medicine, science, and many other domains. It’s not clear what the best strategy is for building these models.

In collaboration with Bloomberg, we explored this question by building an English language model for the financial domain. We took a novel approach and built a massive dataset of financial-related text and combined it with an equally large dataset of general-purpose text. The resulting dataset was about 700 billion tokens, which is about 30 times the size of all the text in Wikipedia.

We trained a new model on this combined dataset and tested it across a range of language tasks on finance documents. We found that BloombergGPT outperforms—by large margins!—existing models of a similar size on financial tasks. Surprisingly, the model still performed on par on general-purpose benchmarks, even though we had aimed to build a domain-specific model.

Why does finance need its own language model?

While recent advances in AI models have demonstrated exciting new applications for many domains, the complexity and unique terminology of the financial domain warrant a domain-specific model. It’s not unlike other specialized domains, like medicine, which contain vocabulary you don’t see in general-purpose text. A finance-specific model will be able to improve existing financial NLP tasks, such as sentiment analysis, named entity recognition, news classification, and question answering, among others. However, we also expect that domain-specific models will unlock new opportunities.

For example, we envision BloombergGPT transforming natural language queries from financial professionals into valid Bloomberg Query Language, or BQL, an incredibly powerful tool that enables financial professionals to quickly pinpoint and interact with data about different classes of securities. So if the user asks: “Get me the last price and market cap for Apple,” the system will return get(px_last,cur_mkt_cap) for(['AAPL US Equity']). This string of code will enable them to import the resulting data quickly and easily into data science and portfolio management tools.
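To make the target format concrete, here is a toy, rule-based translator that produces the BQL string from the example request. It is purely illustrative: the `FIELDS` and `TICKERS` lookup tables and the `to_bql` function are hypothetical, cover only this one example, and are not how BloombergGPT works; the model is meant to learn this mapping from data rather than from hand-written rules.

```python
import re

# Hypothetical phrase-to-field and name-to-ticker tables, covering only the
# example request quoted above.
FIELDS = {"last price": "px_last", "market cap": "cur_mkt_cap"}
TICKERS = {"apple": "AAPL US Equity"}


def to_bql(request: str) -> str:
    """Map a plain-English request to a BQL string via lookup tables."""
    text = request.lower()
    fields = [code for phrase, code in FIELDS.items() if phrase in text]
    tickers = [t for name, t in TICKERS.items() if re.search(rf"\b{name}\b", text)]
    if not fields or not tickers:
        raise ValueError("could not map request to BQL")
    quoted = ",".join(f"'{t}'" for t in tickers)
    return f"get({','.join(fields)}) for([{quoted}])"


print(to_bql("Get me the last price and market cap for Apple"))
# get(px_last,cur_mkt_cap) for(['AAPL US Equity'])
```

A lookup table fails on the first paraphrase it has not seen (“what’s Apple worth?”), which is the kind of gap a language model trained on financial text is meant to close.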

What did you learn while building the new model?

Building these models isn’t easy, and there are a tremendous number of details you need to get right to make them work. We learned a lot from reading papers from other research groups who built language models. To contribute back to the community, we wrote a paper with over 70 pages detailing how we built our dataset, the choices that went into the model architecture, how we trained the model, and an extensive evaluation of the resulting model. We also released detailed “training chronicles” that contain a narrative description of the model-training process. Our goal is to be as open as possible about how we built the model to support other research groups who may be seeking to build their own models. (12)

Auto-GPT, short for Automatic Generative Pre-trained Transformer, is an automated approach to training and optimizing the GPT (Generative Pre-trained Transformer) model. GPT is a highly advanced language model developed by OpenAI that excels at generating coherent and contextually relevant text. Auto-GPT takes this model further by automating the process of fine-tuning and enhancing its performance.

The primary objective of auto-GPT is to improve the efficiency and effectiveness of the GPT model through automated techniques. It accomplishes this by employing methods such as neural architecture search (NAS) and reinforcement learning (RL).

The process of auto-GPT involves several steps:

By automating the training and optimization process, auto-GPT accelerates experimentation and optimization, leading to enhanced performance of the GPT model in specific natural language processing (NLP) tasks. It eliminates much of the manual effort and guesswork involved in finding the optimal model configurations, making it a valuable tool for researchers and developers working with language generation and understanding tasks.

FrugalGPT is an approach that aims to reduce the computational requirements and resource consumption of the GPT (Generative Pre-trained Transformer) model while maintaining a reasonable level of performance. Here’s a general overview of how FrugalGPT works:

The specific techniques and approaches used in FrugalGPT may vary, and different implementations may employ additional methods to achieve computational efficiency. The goal is to find a balance between model size, computational requirements, and performance, enabling the deployment of GPT-based models in resource-constrained environments. It’s important to note that my knowledge is based on information available up until September 2021, and there may have been further developments or advancements related to FrugalGPT since then.

As of my knowledge cutoff in September 2021, JP Morgan Chase is known to be one of the leading financial institutions that has been actively investing in and implementing artificial intelligence (AI) and machine learning (ML) technologies within the financial services sector. While I cannot provide real-time updates, I can provide an overview of some of JP Morgan’s notable initiatives up until that time:

It’s important to note that the financial services sector is constantly evolving, and new developments in AI and ML are likely to emerge. For the most up-to-date and comprehensive information on JP Morgan’s AI and ML implementations, it is recommended to refer to the latest publications and reports.

CrowdStrike is recognized as a leader in the technology industry, particularly in the field of cybersecurity. While it is not specifically focused on the Technology, Media, and Telecommunications (TMT) sector, CrowdStrike has made significant advancements in the application of artificial intelligence (AI) and machine learning (ML) for cybersecurity purposes. Here are some reasons why CrowdStrike is considered a prominent player in AI and ML implementation for cybersecurity:

While CrowdStrike’s primary focus is cybersecurity, its AI and ML-driven approach has garnered recognition and positioned the company as a leader in the industry. It’s important to note that the TMT sector encompasses a wide range of companies and applications, and there may be other players in the sector leveraging AI and ML in different ways. Evaluating leadership in AI and ML implementation within the TMT sector may require considering specific sub-industries or use cases within the broader sector.

Yes, CrowdStrike is widely recognized as a leader in the use of artificial intelligence (AI) and machine learning (ML) in the field of cybersecurity. CrowdStrike’s approach involves leveraging AI and ML technologies to detect and respond to cyber threats more effectively. Here are some reasons why CrowdStrike is considered a leader in this domain:

CrowdStrike’s innovative use of AI and ML in cybersecurity has earned them recognition and market leadership. However, it’s important to note that the cybersecurity landscape is dynamic, and other companies in the field may also employ AI and ML technologies to varying degrees. Evaluating leadership in this space may involve considering multiple factors and industry assessments.

Notion and Slack are both popular productivity tools that serve different purposes and offer distinct features. Here’s a comparison of their key characteristics:

Notion:

Slack:

In summary, Notion is known for its versatility in note-taking, documentation, and knowledge management, while Slack excels in real-time communication and collaboration. Notion provides a comprehensive platform for organizing information and managing tasks, while Slack is more focused on immediate communication and integration with external tools. The choice between the two depends on the specific needs and preferences of the user or team. In some cases, they can complement each other, with Notion serving as a knowledge base and documentation tool, while Slack handles real-time communication.

Both Notion and Slack incorporate elements of artificial intelligence (AI) in their platforms, but their use of AI differs in focus and application. Here’s a comparison of how AI is utilized in Notion and Slack:

Notion:

Slack:

In terms of AI utilization, Notion focuses on providing AI-powered content suggestions, assisting in knowledge base organization, and automating data extraction. Slack, on the other hand, emphasizes AI integration through chatbots, intelligent search, and smart notifications.

It’s important to note that the depth and extent of AI integration may vary over time as both platforms continue to evolve and introduce new features. Evaluating which platform has a better use of AI ultimately depends on specific use cases and individual preferences.