Start your day with intelligence. Get The OODA Daily Pulse.

Start your day with intelligence. Get The OODA Daily Pulse.

Like the famous political campaign axiom: “It’s the economy, stupid”, so goes a variation on the phrase that can be applied to the ongoing discussion about large language (LLM) model training, the development of the metaverse, AI, and crypto mining: “It’s the physical layer, stupid.” APIs, the application layer, and the cloud? All good – but not as ephemeral and “cloud-like” as it is all made out to be sometimes. In the end, there is a physical infrastructure running it all, and the current innovation at the physical layer – in order to build the future – is as interesting now as anything the future holds.

Geek out with us on these case studies from the current physical layer of GPT model training, crypto mining, AI, and metaverse innovation:

“It’s too expensive, just plain too expensive…you’re seeing graduate students trying to fit [LLMs] on laptop CPUs.”

Sally Ward Reports from the EETimes:

Cerebras has open-sourced seven trained GPT-class large language models (LLMs), ranging in size from 111 million to 13 billion parameters, for use in research or commercial projects without royalties, Cerebras CEO Andrew Feldman told EE Times. The models were trained in a matter of weeks on Cerebras CS-2 wafer-scale systems in its Andromeda AI supercomputer.

GPT-class models are notoriously large: GPT-4, which powers ChatGPT, has 175 billion parameters. Training these models is therefore limited to the small number of companies that can afford it, and it takes many months. The pre-trained GPT-class models offered by Cerebras may be fine-tuned with a “modest amount” of custom data to make an industry-specific LLM requiring a relatively small amount of compute by comparison.

“I think if we’re not careful, we end up in this situation where a small handful of companies holds the keys to large language models,” Feldman said. “GPT-4 is a black box, and Llama is closed to for-profit organizations.”

It isn’t just companies smaller than OpenAI and DeepMind that are not able to afford the compute required; many fields of academia are also locked out.

“It’s too expensive, just plain too expensive,” Feldman said. “Conversely, in some of the most interesting work, necessity is driving innovation. … You’re seeing graduate students trying to fit [LLMs] on laptop CPUs, and you’re seeing all sorts of enormous creativity in an effort to do what they can with the resources that are available to them.”

Cerebras has some CS-2 systems available in the cloud for academic use through certain programs, as well as some at the Pittsburgh Supercomputing Center and in Argonne National Labs’ sandbox, he said.

The trained models Cerebras has released, available under the permissive Apache 2.0 license, have been downloaded more than 200,000 times from HuggingFace at the time of writing (about two weeks after release). They are trained on the public PILE dataset from Eleuther.

Training seven models of different sizes allowed Cerebras to derive a scaling law linking the performance of the model (prediction accuracy) to the amount of compute required for training. This will allow the forecasting of model performance based on training budgets. While other companies have published scaling laws, this is the first using a public dataset, the company said.

Cerebras was able to train these models in a few weeks on Andromeda, its 16-node CS-2 supercomputer, as there was no effort required to partition models across smaller chips, Feldman said.

Cerebras was able to train these models in a few weeks on Andromeda, its 16-node CS-2 supercomputer, as there was no effort required to partition models across smaller chips, Feldman said.

Distributing training workloads across multi-chip systems can be a difficult task. Training on multi-chip systems typically uses data parallelism, wherein copies of the model are trained on subsets of the data, which is sufficient for relatively small models. Once models get to about 2.5 billion parameters, data parallelism alone isn’t enough: The model needs to be broken up into chunks, with layers running on different chips. This is called tensor model parallelism. Above about 20 billion parameters, pipelined model parallelism applies, which is when single layers are too big for a single chip and need to be broken up. Feldman pointed out that training OpenAI’s ChatGPT took a team of 35 people to break up the training work and spread it over the GPUs they were using.

“Our work took one person,” he said. “Our wafer is big enough that we never need to break up the work, and because we use the weight-streaming architecture that holds parameters off-chip, we never need to break up the parameters and spread them across chips. As a result, we can train very, very large models.”

Sticking to a strictly data-parallel approach even for very large models makes training much simpler overall, he said.

Will there be a point at which models become so large that it will be too complex to train them on multi-chip systems?

“There is an upper bound on how big a cluster one can make, because at some point, the taxes of distributing compute overwhelm the gains in compute, [but] I don’t think the parameter counts are going to keep getting bigger. … There’s a tradeoff between model size and the amount of data,” he said, referring to Meta’s work on Llama, which showed that smaller models trained on more data are easier to retrain and fine-tune.

“If you keep growing the parameters … the models are so big, they’re difficult and awkward to work with,” he said. “I think what you’re going to see is a great deal of work on better data, cleaner data.” (1)

Check out Cerebras-GPT on Hugging Face: https://lnkd.in/d6RM7M_y

Cerebras-GPT White Paper: Open Compute-Optimal Language Models Trained on the Cerebras Wafer-Scale Cluster

Came in late, leaving too early.

From Paul Alcorn at tom’s Hardware:

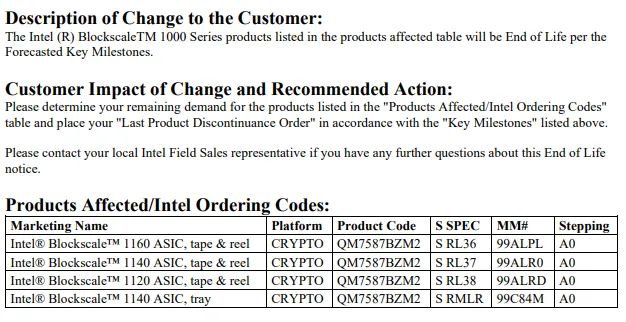

It’s been just a year since Intel officially announced its Bitcoin-mining Blockscale ASICs, but [this week] the company announced the end of life of its first-gen Blockscale 1000-series chips without announcing any follow-up generations of the chips. We spoke with Intel on the matter, and the company told Tom’s Hardware that “As we prioritize our investments in IDM 2.0, we have end-of-lifed the Intel Blockscale 1000 Series ASIC while we continue to support our Blockscale customers.”

Intel’s statement cites the company’s tighter focus on its IDM 2.0 operations as the reason for ending the Blockscale ASICs, a frequent refrain in many of its statements as it has exited several businesses amid company-wide belt-tightening. We also asked Intel if it planned to exit the Bitcoin ASIC business entirely, but the company responded, “We continue to monitor market opportunities.”

In the original announcement that the company would enter the blockchain market, then-graphics-chief Raja Koduri noted that the company had created a Custom Compute Group within the AXG graphics unit to support the Bitcoin ASICs and “additional emerging technology.” However, Intel recently restructured the AXG group, and Koduri left the company shortly thereafter. We asked Intel about the fate of the Custom Compute Group, but it says it has no organizational changes to share at this time.

Image Credit: Intel

Intel hasn’t announced any next-gen Bitcoin mining products and its Blockscale ASIC landing pages are now all inactive, but its statement implies that it is leaving the door open for future opportunities if they arise. Intel’s initial entry into the market for bitcoin-mining chips came at an inopportune time, as its chips finally became available right as Bitcoin valuations crashed at the end of the last crypto craze, and Intel’s apparent exit from the market comes as Bitcoin is back on the upswing — it recently cleared $30,000 for the first time in nearly a year.

Intel’s Bitcoin-mining chips initially came into the public eye under the Bonanza Mine codename it used for its R&D chips that were never commercialized, but the company later announced it would enter the blockchain market and summarily launched a second-gen model named ‘Blockscale” to select large-scale mining companies like BLOCK, GRIID Infrastructure, and Argo Blockchain, among others.

Aside from exceptionally competitive performance relative to competing Bitcoin-mining chips, Blockscale’s big value prop stemmed from the stability of Intel’s chip-fabbing resources. Several large industrial mining companies signed large long-term deals for a steady supply of Blockscale ASICs, thus circumventing the volatility with the mostly China-based manufacturers that engaged in wild pricing manipulations based on Bitcoin valuations, were subject to tariffs, and suffered from supply disruptions and shortages, not to mention the increased costs of logistics and shipping from China.

Intel hasn’t announced any next-gen Bitcoin mining products and its Blockscale ASIC landing pages are now all inactive, but its statement implies that it is leaving the door open for future opportunities if they arise. Intel’s initial entry into the market for bitcoin-mining chips came at an inopportune time, as its chips finally became available right as Bitcoin valuations crashed at the end of the last crypto craze, and Intel’s apparent exit from the market comes as Bitcoin is back on the upswing — it recently cleared $30,000 for the first time in nearly a year.

Intel’s Bitcoin-mining chips initially came into the public eye under the Bonanza Mine codename it used for its R&D chips that were never commercialized, but the company later announced it would enter the blockchain market and summarily launched a second-gen model named ‘Blockscale” to select large-scale mining companies like BLOCK, GRIID Infrastructure, and Argo Blockchain, among others.

Aside from exceptionally competitive performance relative to competing Bitcoin-mining chips, Blockscale’s big value prop stemmed from the stability of Intel’s chip-fabbing resources. Several large industrial mining companies signed large long-term deals for a steady supply of Blockscale ASICs, thus circumventing the volatility with the mostly China-based manufacturers that engaged in wild pricing manipulations based on Bitcoin valuations, were subject to tariffs, and suffered from supply disruptions and shortages, not to mention the increased costs of logistics and shipping from China.

Intel tells us that it will continue to serve its existing Boockscale customers, implying it will satisfy its existing long-term contracts. Intel’s customers have until October 2023 to order new chips, and shipments will end in April 2024. Meanwhile, Intel has scrubbed nearly all of the landing and product pages for the Blockscale chips from its website.

Intel’s latest move comes on the heels of a cost-cutting spree — the company sold off its server-building business last week, killed off its networking switch business, ended its 5G modems, wound down its Optane Memory production, jettisoned the company’s drone business and sold its SSD storage unit to SK Hynix.

Intel’s cost-cutting also applies to numerous other projects, as Intel has also shelved plans for a mega-lab in Oregon and canceled its planned development center in Haifa. The company has also trimmed some programs, like its RISC-V pathfinder program, and streamlined its data center graphics roadmap by axing the Rialto Bridge GPUs and delaying its Falcon Shores chips to 2025.

It’s unclear if Intel will continue to make further cuts to its far-flung businesses, but it is clear that the company is committed to slimming down to increase its focus on its core competencies as it weathers some of the worst market conditions in decades, not to mention intense competition from multiple foes. (2)

Microsoft has been secretly developing the chips since 2019.

Baba Tamim at Interesting Engineering reports:

Microsoft is reportedly working on its own AI chips to train complex language models. The move is thought to be intended to free the corporation from reliance on Nvidia chips, which are in high demand.

Select Microsoft and OpenAI staff members have been granted access to the chips to verify their functionality, The Information reported on Tuesday.

“Microsoft has another secret weapon in its arsenal: its own artificial intelligence chip for powering the large-language models responsible for understanding and generating humanlike language,” read The Information article.

Since 2019, Microsoft has been secretly developing the chips, and that same year the Redmond, Washington-based tech giant also made its first investment in OpenAI, the company behind the sensational ChatGPT chatbot.

Nvidia is presently the main provider of AI server chips, and businesses are scrambling to buy them in order to use AI software. For the commercialization of ChatGPT, it is predicted that OpenAI would need more than 30,000 of Nvidia’s A100 GPUs.

While Nvidia tries to meet demand, Microsoft wants to develop its own AI chips. The corporation is apparently speeding up work on the project, code-named “Athena“.

Microsoft intends to make its AI chips widely available to Microsoft and OpenAI as early as next year, though it hasn’t yet said if it will make them available to Azure cloud users, noted The Information.

The chips are not meant to replace Nvidia’s, but if Microsoft continues to roll out AI-powered capabilities in Bing, Office programs, GitHub, and other services, they could drastically reduce prices.

Bloomberg reported in late 2020 that Microsoft was considering developing its own ARM-based processors for servers and possibly even a future Surface device. Microsoft has been working on its own ARM-based chips for some years.

Although these chips haven’t yet been made available, Microsoft has collaborated with AMD and Qualcomm to develop specialized CPUs for its Surface Laptop and Surface Pro X devices.

The news sees Microsoft join the list of tech behemoths with their own internal AI chips, which already includes the likes of Amazon, Google, and Meta. However, most companies still rely on the use of Nvidia chips to power their most recent large language models.

The most cutting-edge graphics cards from Nvidia are going for more than $40,000 on eBay as demand for the chips used to develop and use artificial intelligence software increases, CNBC reported last week.

The A100, a nearly $10,000 processor that has been dubbed the “workhorse” for AI applications, was replaced by the H100, which Nvidia unveiled last year. (3)

“Meta and Qualcomm are teaming up to develop custom chipsets for virtual reality products, the companies announced on Friday.

The two U.S. technology giants have signed a multi-year agreement “to collaborate on a new era of spatial computing,” using Qualcomm’s “extended reality” (XR) Snapdragon technology. Extended reality refers to technologies including virtual and augmented reality, which merge the physical and digital worl.

The Quest products are Meta’s line of virtual reality headsets. The Meta Quest 2 headset currently uses Qualcomm’s Snapdragon XR2 chipset.

Since its rebrand in 2021, Facebook-parent Meta has staked its future on the metaverse — a term that encompasses virtual and augmented reality technology — with the aim of having people working and playing in digital worlds in the near future.

In the smartphone field, companies ranging from Apple to Samsung have designed their own custom processors to differentiate from competitors and create better products than they might have using off-the-shelf chips.

A focus on custom chips by Meta makes sense as it looks to differentiate its headsets and possibly create unique experiences for users.

“Unlike mobile phones, building virtual reality brings novel, multi-dimensional challenges in spatial computing, cost, and form factor,” Zuckerberg said. “These chipsets will help us keep pushing virtual reality to its limits and deliver awesome experiences.”

The length of the deal between the companies and the financial terms of the deal were not disclosed.

It comes as Meta gears up to launch a new virtual reality headset in October, even as losses widened in its Reality Labs division, which includes its VR business, in the second quarter of the year.” (4)

For a more detailed report of this development, see Meta Partners With Qualcomm To Develop Customized Chips for the Metaverse.