Start your day with intelligence. Get The OODA Daily Pulse.

AI Transparency is a concept centered on openness, comprehensibility, and accountability in technological systems. In the context of AI, transparency refers to the extent to which the inner workings and decision-making processes of AI systems are made accessible and understandable to users and other stakeholders. This includes insights into the training data, the algorithms employed, and the rationale behind specific decisions made by AI systems. The goal is to ensure these systems can be scrutinized and trusted, especially when their decisions significantly impact individuals and society.

Two new reports aim to move “AI Transparency” from its early conceptual phase toward a structural implementation that is eventually fully operational. The Harvard Kennedy School’s Shorenstein Center on Media, Politics and Public Policy released “A CLeAR Documentation Framework for AI Transparency,” and Microsoft’s Corporate Social Responsibility (CSR) thought leadership and research efforts released the “2024 Responsible AI Transparency Report.”

The following is a definitive overview of AI Transparency, based on the sources that have informed our research to date, as a critical issue of ethics, governance, risk, and business model innovation. The aim, as a research team at Stanford put it, is “to better understand the new status quo for transparency…and areas of sustained and systemic opacity for foundation models.”

In February of 2023, in Computer, the flagship publication of the IEEE Computer Society, Swedish researchers proposed a three-level AI transparency framework: “The concept of transparency is fragmented in artificial intelligence (AI) research, often limited to the transparency of the algorithm alone. We propose that AI transparency operates on three levels—algorithmic, interaction, and social—all of which need to be considered to build trust in AI. We expand upon these levels using current research directions, and identify research gaps resulting from the conceptual fragmentation of AI transparency highlighted within the context of the three levels.”

A new index rates the transparency of 10 foundation model companies and finds them lacking.

In the fall of 2023, the Center for Research on Foundation Models (CRFM), part of Stanford’s Institute for Human-Centered AI (HAI), released the inaugural Foundation Model Transparency Index. From the HAI announcement of the release of the index:

Companies in the foundation model space are becoming less transparent, says Rishi Bommasani, Society Lead at the Center for Research on Foundation Models (CRFM), within Stanford HAI. For example, OpenAI, which has the word “open” right in its name, has clearly stated that it will not be transparent about most aspects of its flagship model, GPT-4.

Less transparency makes it harder for other businesses to know if they can safely build applications that rely on commercial foundation models; for academics to rely on commercial foundation models for research; for policymakers to design meaningful policies to rein in this powerful technology; and for consumers to understand model limitations or seek redress for harms caused.

To assess transparency, Bommasani and CRFM Director Percy Liang brought together a multidisciplinary team from Stanford, MIT, and Princeton to design a scoring system called the Foundation Model Transparency Index. The FMTI evaluates 100 different aspects of transparency, from how a company builds a foundation model, how it works, and how it is used downstream.

When the team scored 10 major foundation model companies using their 100-point index, they found plenty of room for improvement: The highest scores, which ranged from 47 to 54, aren’t worth crowing about, while the lowest score bottoms out at 12. “This is a pretty clear indication of how these companies compare to their competitors, and we hope will motivate them to improve their transparency,” Bommasani says.

Another hope is that the FMTI will guide policymakers toward effectively regulating foundation models. “For many policymakers in the EU as well as in the U.S., the U.K., China, Canada, the G7, and a wide range of other governments, transparency is a major policy priority,” Bommasani says.

The index, accompanied by an extensive 100-page paper on the methodology and results, makes available all of the data on the 100 indicators of transparency, the protocol used for scoring, and the developers’ scores along with justifications.

“To policymakers, transparency is a precondition for other policy efforts.”

A lack of transparency has long been a problem for consumers of digital technologies, Bommasani notes. We’ve seen deceptive ads and pricing across the internet, unclear wage practices in ride-sharing, dark patterns tricking users into unknowing purchases, and myriad transparency issues around content moderation that have led to a vast ecosystem of mis- and disinformation on social media. As transparency around commercial FMs wanes, we face similar sorts of threats to consumer protection, he says.

In addition, transparency around commercial foundation models matters for advancing AI policy initiatives and ensuring that upstream and downstream users in industry and academia have the information they need to work with these models and make informed decisions, Liang says.

Foundation models are an increasing focus of AI research and adjacent scientific fields, including in the social sciences, says Shayne Longpre, a PhD candidate at MIT: “As AI technologies rapidly evolve and are rapidly adopted across industries, it is particularly important for journalists and scientists to understand their designs, and in particular the raw ingredients, or data, that powers them.”

To policymakers, transparency is a precondition for other policy efforts. Foundation models raise substantive questions involving intellectual property, labor practices, energy use, and bias, Bommasani says. “If you don’t have transparency, regulators can’t even pose the right questions, let alone take action in these areas.”

And then there’s the public. As the end-users of AI systems, Bommasani says, they need to know what foundation models these systems depend on, how to report harms caused by a system, and how to seek redress.

“A comprehensive assessment of the transparency of foundation model developers.”

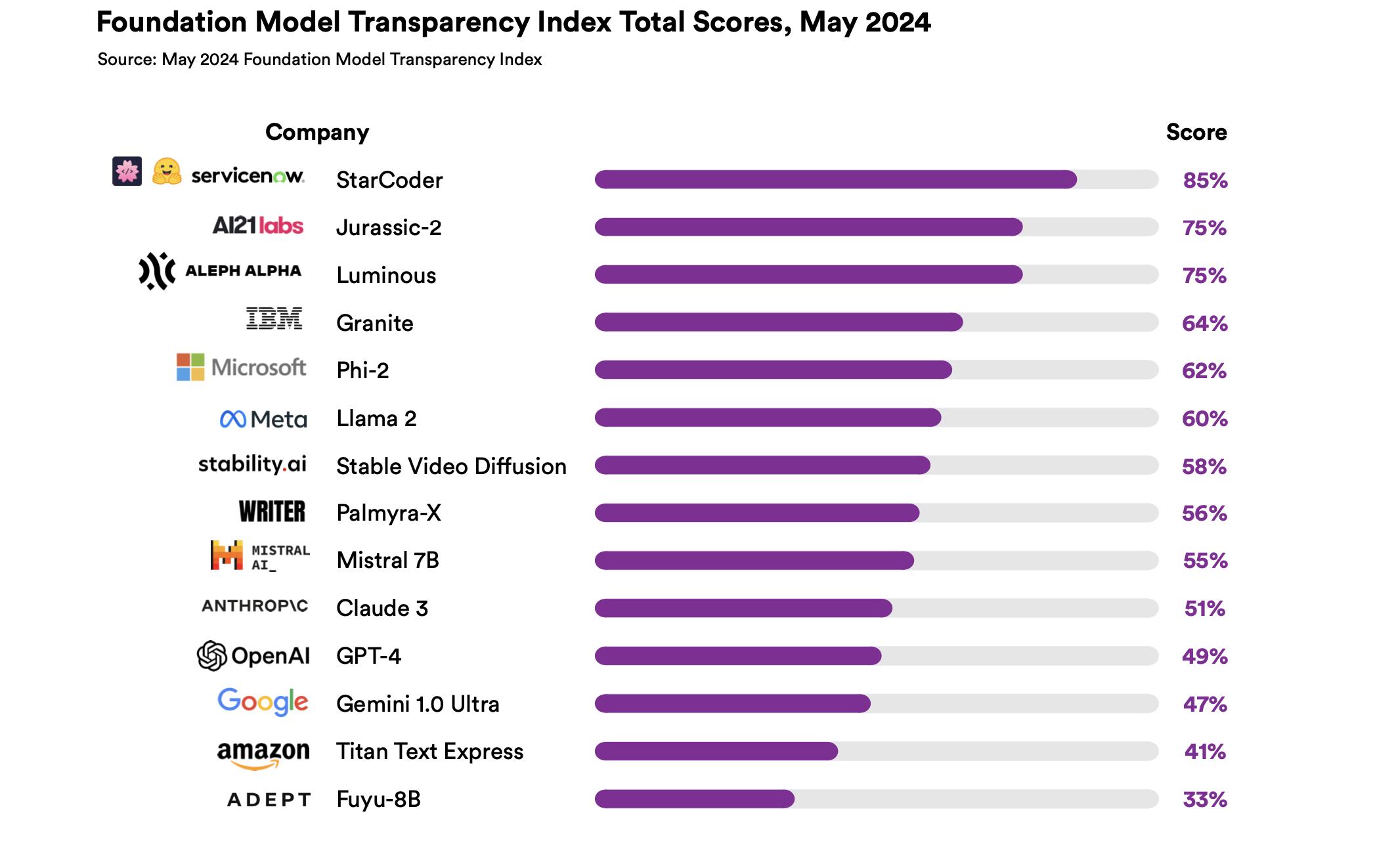

Context. The October 2023 Foundation Model Transparency Index scored 10 major foundation model developers, such as Google and OpenAI, on 100 transparency indicators. The Index showed developers are generally quite opaque: the average score was 37 and the top score was 54 out of 100.

Design. To understand how the landscape has changed, we conduct a follow-up six months later, scoring developers on the same 100 indicators. In contrast to the October 2023 FMTI, we request that developers submit transparency reports to affirmatively disclose their practices for each of the 100 indicators.

Execution. For the May 2024 Index, 14 developers submitted transparency reports that they have validated and approved for public release. Given their disclosures, we have scored each developer to better understand the new status quo for transparency, changes from October 2023, and areas of sustained and systemic opacity for foundation models.

Image Source: Stanford HAI CRFM
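
The scoring arithmetic described above can be sketched in a few lines of Python. This is a minimal illustration, not CRFM's scoring code: it assumes each indicator is worth one point (which is what a 100-point index over 100 indicators implies), and the `Indicator` class, the domain labels, and the sample data are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Indicator:
    """One transparency indicator for one developer (hypothetical structure)."""
    name: str        # illustrative label, not an actual FMTI indicator name
    domain: str      # assumed grouping: "build", "model", or "downstream"
    satisfied: bool  # True if the developer discloses this item


def total_score(indicators):
    """Assumption: one point per satisfied indicator."""
    return sum(1 for ind in indicators if ind.satisfied)


def score_by_domain(indicators):
    """Points earned within each domain."""
    totals = {}
    for ind in indicators:
        totals[ind.domain] = totals.get(ind.domain, 0) + int(ind.satisfied)
    return totals


def score_change(earlier, later):
    """Change in total score between two scoring rounds."""
    return total_score(later) - total_score(earlier)


# Made-up example with four indicators instead of 100.
october = [
    Indicator("data sources", "build", False),
    Indicator("model size", "model", True),
    Indicator("usage policy", "downstream", True),
    Indicator("redress mechanism", "downstream", False),
]
may = [
    Indicator("data sources", "build", True),
    Indicator("model size", "model", True),
    Indicator("usage policy", "downstream", True),
    Indicator("redress mechanism", "downstream", False),
]

print(total_score(october), total_score(may), score_change(october, may))
print(score_by_domain(may))
```

Grouping the points by domain makes it easier to see where a developer gained or lost ground between two rounds of the index.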

Stanford HAI researchers also frame the role of transparency in AI policy implementation and effectiveness:

“January 28, 2024 was a milestone in U.S. efforts to harness and govern AI. It marked 90 days since President Biden signed Executive Order 14110 on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (“the AI EO”). The government is moving swiftly on implementation with a level of transparency not seen for prior AI-related EOs. A White House Fact Sheet documents actions made to date, a welcome departure from prior opacity on implementation. The scope and breadth of the federal government’s progress on the AI EO is a success for the Biden administration and the United States, and it also demonstrates the importance of our research on the need for leadership, clarity, and accountability.

Today, we announce the first update of our Safe, Secure, and Trustworthy AI EO Tracker, a detailed, line item-level tracker created to follow the federal government’s implementation of the AI EO’s 150 requirements.”

From Microsoft’s 2024 Responsible AI Transparency Report:

In 2016, our Chairman and CEO, Satya Nadella, set us on a clear course to adopt a principled and human-centered approach to our investments in Artificial Intelligence (AI). Since then, we have been hard at work to build products that align with our values. As we design, build, and release AI products, six values (transparency, accountability, fairness, inclusiveness, reliability and safety, and privacy and security) remain our foundation and guide our work every day.

To advance our transparency practices, in July 2023, we committed to publishing an annual report on our responsible AI program, taking a step that reached beyond the White House Voluntary Commitments that we and other leading AI companies agreed to. This is our inaugural report delivering on that commitment, and we are pleased to publish it on the heels of our first year of bringing generative AI products and experiences to creators, non-profits, governments, and enterprises worldwide.

In this inaugural annual report, we provide insight into how we:

First, we provide insights into our development process, exploring how we map, measure, and manage generative AI risks. Next, we offer case studies to illustrate how we apply our policies and processes to generative AI releases. We also share details about how we empower our customers as they build their own AI applications responsibly. Lastly, we highlight how the growth of our responsible AI community, our efforts to democratize the benefits of AI, and our work to facilitate AI research benefit society at large.

There is no finish line for responsible AI. While this report doesn’t have all the answers, we are committed to sharing our learnings early and often and engaging in a robust dialogue around responsible AI practices. We invite the public, private organizations, non-profits, and governing bodies to use this first transparency report to accelerate the incredible momentum in responsible AI we’re already seeing around the world.

In this report, we share how we build generative applications responsibly, how we make decisions about releasing our generative applications, how we support our customers as they build their own AI applications, and how we learn and evolve our responsible AI program. These investments, internal and external, continue to move us toward our goal: developing and deploying safe, secure, and trustworthy AI applications that empower people.

We created a new approach for governing generative AI releases, which builds on our Responsible AI Standard and the National Institute of Standards and Technology’s AI Risk Management Framework. This approach requires teams to map, measure, and manage risks for generative applications throughout their development cycle.

We’ve launched 30 responsible AI tools that include more than 100 features to support customers’ responsible AI development.

We’ve published 33 Transparency Notes since 2019 to provide customers with detailed information about our platform services like Azure OpenAI Service.

We continue to participate in and learn from a variety of multi-stakeholder engagements in the broader responsible AI ecosystem, including the Frontier Model Forum, the Partnership on AI, MITRE, and the National Institute of Standards and Technology.

We support AI research initiatives such as the National AI Research Resource and fund our own Accelerating Foundation Models Research and AI & Society Fellows programs. Our 24 Microsoft Research AI & Society Fellows represent countries in North America, Eastern Africa, Australia, Asia, and Europe.

In the second half of 2023, we grew our responsible AI community from 350 members to over 400 members, a 16.6 percent increase.

We’ve invested in mandatory training for all employees to increase the adoption of responsible AI practices. As of December 31, 2023, 99 percent of employees completed the responsible AI module in our annual Standards of Business Conduct training.

Image Source: Microsoft
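
The community growth figure above is easier to read with the arithmetic spelled out. In the minimal sketch below (the function names are ours, not Microsoft's), growth from 350 to exactly 400 works out to about 14.3 percent, so the reported 16.6 percent implies an end count of roughly 408, which is consistent with “over 400.”

```python
def percent_increase(start, end):
    """Percentage growth from start to end."""
    return (end - start) / start * 100


def implied_end_count(start, percent):
    """End count implied by a starting count and a percentage increase."""
    return start * (1 + percent / 100)


print(round(percent_increase(350, 400), 1))  # 14.3
print(round(implied_end_count(350, 16.6)))   # 408
```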

For the full Microsoft CSR 2024 Responsible AI Transparency Report, go to this link.

A new report published out of Harvard Kennedy School’s Shorenstein Center on Media, Politics and Public Policy provides a framework to help AI practitioners and policymakers ensure transparency in artificial intelligence (AI) systems. The report, titled “A CLeAR Documentation Framework for AI Transparency: Recommendations for Practitioners & Context for Policymakers,” outlines key recommendations for documenting the process of developing and deploying AI technologies. The framework is meant to help stakeholders understand how AI systems work and are developed, by ensuring that those building and deploying AI systems provide transparency into their datasets, models, and systems.

The recommendations focus on documenting an AI system’s purpose, capabilities, development process, data sources, potential biases, limitations or risks. The report argues this level of transparency is essential for building trust in AI and ensuring systems are developed and used responsibly. “As AI becomes more prevalent in our lives, it is critical that the public understands how these systems make decisions and recommendations that impact them,” said report co-author and former Shorenstein Center Fellow Kasia Chmielinski. “Our framework provides a roadmap for practitioners to openly share information about their AI work in a responsible manner.” This framework was developed by a team of experts that have been at the cutting edge of AI documentation across industry and academia. They hope the recommendations will help standardize transparency practices and inform the ongoing policy discussion around regulating AI.
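
One way to picture these recommendations is as a structured record with a simple completeness check. The sketch below is purely illustrative: the CLeAR report does not prescribe this schema, and the `SystemDocumentation` class, its field names (taken from the list of topics above), and the `missing_fields` helper are assumptions made for this example.

```python
from dataclasses import dataclass, field, fields


@dataclass
class SystemDocumentation:
    """Hypothetical documentation record for a dataset, model, or AI system."""
    purpose: str = ""
    capabilities: list[str] = field(default_factory=list)
    development_process: str = ""
    data_sources: list[str] = field(default_factory=list)
    potential_biases: list[str] = field(default_factory=list)
    limitations_or_risks: list[str] = field(default_factory=list)


def missing_fields(doc):
    """Return the names of fields that are still empty, in declaration order."""
    return [f.name for f in fields(doc) if not getattr(doc, f.name)]


# Made-up example: a partially documented system.
doc = SystemDocumentation(
    purpose="Rank support tickets by urgency",
    data_sources=["internal ticket archive"],
)
print(missing_fields(doc))
```

A check like this only flags what has not been written down; it says nothing about whether the documentation that exists is accurate or useful, which is the harder problem the report addresses.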

This report introduces the CLeAR (Comparable, Legible, Actionable, and Robust) Documentation Framework to offer guiding principles for AI documentation. The framework is designed to help practitioners and others in the AI ecosystem consider the complexities and tradeoffs required when developing documentation for datasets, models, and AI systems (which contain one or more models, and often other software components). Documentation of these elements is crucial and serves several purposes, including: (1) Supporting responsible development and use, as well as mitigation of downstream harms, by providing transparency into the design, attributes, intended use, and shortcomings of datasets, models, and AI systems; (2) Motivating dataset, model, or AI system creators and curators to reflect on the choices they make; and (3) Facilitating dataset, model, and AI system evaluation and auditing. We assert that documentation should be mandatory in the creation, usage, and sale of datasets, models, and AI systems.

This framework was developed with the expertise and perspective of a team that has worked at the forefront of AI documentation across both industry and the research community. As the need for documentation in machine learning and AI becomes more apparent and the benefits more widely acknowledged, we hope it will serve as a guide for future AI documentation efforts as well as context and education for regulators’ efforts toward AI documentation requirements.

“…transparency is a foundational, extrinsic value—a means for other values to be realized. Applied to AI development, transparency can enhance accountability by making it clear who is responsible for which kinds of system behavior.”

Machine learning models are designed to identify patterns and make predictions based on statistical analysis of data. Modern artificial intelligence (AI) systems are often made up of one or more machine learning models combined with other components. While the statistical foundations of modern machine learning are decades old, technological developments in the last decade have led to both more widespread deployment of AI systems and an increasing awareness of the risks associated with them. The use of statistical analysis to make predictions is prone to replicate already existing biases and even introduce new ones, causing issues like racial bias in medical applications, gender bias in finance, and disability bias in public benefits. AI systems can also lead to other (intentional as well as unintentional) harms, including the production of misinformation and the sharing or leaking of private information.

With growing national and international attention to AI regulation, rights-based principles, data equity, and risk mitigation, this is a pivotal moment to think about the social impact of AI, including its risks and harms, and the implementation of accountability and governance more broadly. Most proposed AI regulation mandates some level of transparency, as transparency is crucial for addressing the ways in which AI systems impact people. This is because transparency is a foundational, extrinsic value—a means for other values to be realized. Applied to AI development, transparency can enhance accountability by making it clear who is responsible for which kinds of system behavior. This can lessen the amount of time it takes to stop harms from proliferating once they are identified, and provides legal recourse when people are harmed. Transparency guides developers towards non-discrimination in deployed systems, as it encourages testing for it and being transparent about evaluation results. Transparency enables reproducibility, as details provided can then be followed by others. This in turn incentivizes integrity and scientific rigor in claims made by AI developers and deployers and improves the reliability of systems. And transparency around how an AI system works can foster appropriate levels of trust from users and enhance human agency.

Transparency can be realized, in part, by providing information about how the data used to develop and evaluate the AI system was collected and processed, how AI models were built, trained, and fine-tuned, and how models and systems were evaluated and deployed. Towards this end, documentation has emerged as an essential component of AI transparency and a foundation for responsible AI development.

Although AI documentation may seem like a simple concept, determining what kinds of information should be documented and how this information should be conveyed is challenging in practice. Over the past decade, several concrete approaches, as well as evaluations of these approaches, have been proposed for documenting datasets, models, and AI systems. Together, this body of work supplies a rich set of lessons learned.

Based on these lessons, this paper introduces the CLeAR Documentation Framework, designed to help practitioners and policymakers understand what principles should guide the process and content of AI documentation and how to create such documentation… At a high level, the CLeAR Principles state that documentation should be Comparable, Legible, Actionable, and Robust.

A healthy documentation ecosystem requires the participation of both practitioners and policymakers. While both audiences benefit from understanding current approaches, principles, and tradeoffs, practitioners face more practical challenges of implementation, while policymakers need to establish structures for accountability that include and also extend beyond documentation, as documentation is necessary but not sufficient for accountability when it comes to complex technological systems. We offer guidance for both audiences.

Documentation, like most responsible AI practices, is an iterative process. We cannot expect a single approach to fit all use cases, and more work needs to be done to establish approaches that are effective in different contexts. We hope this document will provide a basis for this work.

For the full CLeAR Report, go to this link. For a Summary of the report, go to this link.

This cogent, concise summary of the future of AI regulation (taken from the Congressional Research Service report Artificial Intelligence: Overview, Recent Advances, and Considerations for the 118th Congress) is worth a read. It contextualizes transparency as one of “ten principles for the stewardship of AI applications…”:

As the use of AI technologies has grown, so too have discussions of whether and how to regulate them. Internationally, the European Union has proposed the Artificial Intelligence Act (AIA), which would create regulatory oversight for the development and use of a wide range of AI applications, with requirements varying by risk category, from banning systems with “unacceptable risk” to allowing free use of those with minimal or no risk. In one recently passed version of the AIA, the European Parliament agreed to changes that included a ban on the use of AI in biometric surveillance and a requirement for GenAI systems to disclose that the outputs are AI-generated. In the United States, legislation such as the Algorithmic Accountability Act of 2022 (H.R. 6850 and S. 3572, 117th Congress) would have directed the Federal Trade Commission to require certain organizations to perform impact assessments of automated decision systems—including those using ML and AI—and augmented critical decision processes. Some researchers have noted that these proposals “spring from widely different political contexts and legislative traditions.”

The United States has yet to enact legislation to broadly regulate AI, and the AIA now enters a trilogue negotiation among representatives of the three main institutions of the EU (Parliament, Commission, and Council). Depending on the final negotiated text of the AIA, the EU and United States might begin to align or diverge on AI regulation, as discussed by researchers. Rather than broad regulation of AI technologies that could be used across sectors, some stakeholders have suggested a more targeted approach, regulating the use of AI technologies in particular sectors. The federal government has taken steps over the past few years to evaluate AI regulation in this way. In November 2020, in response to Executive Order 13859, “Maintaining American Leadership in Artificial Intelligence,” OMB released a memorandum to the heads of federal agencies providing guidance for regulatory and nonregulatory oversight of AI applications developed and deployed outside of the federal government.

The memorandum laid out 10 principles for the stewardship of AI applications, including risk assessment, fairness and nondiscrimination, disclosure and transparency, and interagency coordination. It further touched on reducing barriers to the deployment and use of AI, including increasing access to government data, communicating benefits and risks to the public, engaging in the development and use of voluntary consensus standards, and engaging in international regulatory cooperation efforts. Additionally, the memorandum directed federal agencies to provide plans to conform to the guidance, including any statutory authorities governing agency regulation of AI applications, regulatory barriers to AI applications, and any planned or considered regulatory actions on AI. So far, few agencies appear to have provided comprehensive, publicly available responses.

Industry has echoed calls for regulation of AI technologies, with some companies putting forth recommendations. However, some analysts have argued that the push for regulations from technology firms is intended to protect companies’ interests and might not align with the priorities of other stakeholders. Further, some argue that large technology companies continue to prioritize the speed of AI technology deployment ahead of concerns about safety and accuracy. In March 2023, the U.S. Chamber of Commerce released a report calling for AI regulation, stating,

Policy leaders must undertake initiatives to develop thoughtful laws and rules for the development of responsible AI and its ethical deployment. A failure to regulate AI will harm the economy, potentially diminish individual rights, and constrain the development and introduction of beneficial technologies.

Our framing of AI Transparency is in the context of the future of trust, which is a broad research theme at OODA Loop, overlapping with topics like the future of money (i.e., the creation of new value exchange mechanisms, value creation, and value storage systems – and the role trust will play in the design of these new monetary systems). Likewise, notions of trust (or lack thereof) will impact the future of Generative AI, AI governance (i.e., Trustworthy AI), and the future of autonomous systems and exponential technologies generally. In fact, two panels at OODACon 2023 were concerned with the future of trust relationships and the design of trust into future systems. You can find links to the summaries of these panel discussions here:

The following sections of those panel summaries cover the points where the conversation turned to transparency and pointed us to the strategic framing that informed this post. Most notable is the observation that blockchain and web3 technologies also have transparency issues, even though the conventional marketing and branding around them holds that they are intrinsically transparent:

Forming Relationships Through Videogames: A recent Deloitte survey was discussed which captured responses on various aspects of personal relationships and videogames. The survey highlighted:

Is Web3 Transparency a Marketing-driven Fallacy?: Transparency in web3 may be a fallacy despite marketing claims. As discussed, the Deloitte survey highlights security and trust issues, emphasizing that this technology is used by bad actors. The lack of a leadership mindset and education, along with regulatory arbitrage, contribute to the fallacy of transparency. Additionally, centralization can promote visibility, but the paradigm shift and lack of roadmap make it challenging to achieve true transparency. While the marketing may assert transparency in web3, there are various factors that challenge its actual realization.

Trustworthy AI: Trustworthy AI is a crucial aspect of the development and implementation of artificial intelligence systems. It involves factors such as credibility, erosion of trust, and the establishment of trust between humans and automated systems. Trustworthy AI aims to address concerns regarding transparency, reliability, and accountability in AI decision-making processes. However, there are challenges in measuring and ensuring trust in AI systems. Adversarial machine learning is an area of concern where adversaries exploit AI systems, highlighting the need for robust security measures. The future of trustworthy AI includes considerations of regulations, the impact on various industries like healthcare and insurance, and the need for responsible development and training of AI. Although the potential of AI is vast, there is cautious optimism and a wait-and-see attitude due to concerns about government regulations and the potential displacement of human decision-making. The development of communities and human relationships, as well as seeking out reliable sources of information, play a role in navigating the complexities of AI. Overall, the goal is to strike a balance between leveraging the capabilities of AI while ensuring its trustworthiness and accountability.

Working Definitions of Trust: Trust can be defined as a concept encompassing belief, confidence, and credibility. It involves relying on safe and reliable sources, individuals, and information. Trust is crucial for managing relationships and organizations, especially in the face of misinformation and deception. It is difficult for adversaries to attack trust, as it serves as a center of gravity for people’s will and cannot be easily compromised. Trust is also related to value similarity and the sense of belonging within a community. However, determining who deserves trust and who does not can be complex, involving factors such as clearance holders and credit ratings. Ultimately, trust involves looking beyond established norms and being aware of the illusion of closeness.

Incentives and motives: What is someone trying to achieve with this pattern of events?: Incentives and motives play crucial roles in trust. Blockchain technology has faced challenges due to misaligned economic incentives and regulatory oversight, but it continues to disrupt various sectors and offers new opportunities. Thus, understanding and aligning incentives and motives are essential in establishing and maintaining trust. Incentives should foster and motives should reflect a positive sense of belonging and community.

What role do Benefit systems play in trust? Benefit systems play a role in trust by contributing to the establishment of trust within organizations. Encouraging risk-taking and fostering a culture of learning and innovation are essential for building trust. Benefit systems also play a role in managing trust within organizations amidst the challenges of misinformation and disinformation. Overall, benefit systems can support trust-building efforts by promoting transparency, accountability, and credibility in organizations.

The Illusion of Closeness: The concept of the “illusion of closeness” was discussed in the context of trust and technological disruption. Social media platforms are highlighted for creating an illusion of trust and the significant impact of misinformation. It is noted that conspiracy theories can serve as a “belonging signal”. Building trust involves diverse ideas, listening to people, and exploring insider trust. The impact of AI in various fields like healthcare and future business models is also mentioned. However, there is caution and a need for transparency and proper training in AI implementation. Overall, the illusion of closeness and the importance of trust in the age of technological disruption were highlighted in the discussion.

For more OODA Loop News Briefs and Original Analysis, see OODA Loop | Generative AI and OODA Loop | LLM.

On Trust and Zero Trust: New Paradigms of Trust, Designing Trust into Systems, and Trustworthy AI: A compilation of OODA Loop Original Analysis and OODAcast conversations concerned with trust, zero trust and trustworthy AI.

Moody’s on the “Recoding” of Entire Industries, Including The Financial Sector, by the Convergence of AI and the Blockchain: Amazon, Airbnb, and Uber are all examples of disintermediation (innovation that undermines established or incumbent structures of a market, organization, or industry sector). Moody’s went with the recent headline that the “Convergence of AI and blockchain could recode multiple industries.” The notion of the “recoding” of an industry sector is compelling – as it suggests a more nuanced, code-level transformation of an industry sector (as opposed to just the disruption that accompanies disintermediation). We explore the nuances of the Moody’s report.

Corporate Board Accountability for Cyber Risks: With a combination of market forces, regulatory changes, and strategic shifts, corporate boards and directors are now accountable for cyber risks in their firms. See: Corporate Directors and Risk

Geopolitical-Cyber Risk Nexus: The interconnectivity brought by the Internet has caused regional issues that affect global cyberspace. Now, every significant event has cyber implications, making it imperative for leaders to recognize and act upon the symbiosis between geopolitical and cyber risks. See The Cyber Threat

Ransomware’s Rapid Evolution: Ransomware technology and its associated criminal business models have seen significant advancements. This has culminated in a heightened threat level, resembling a pandemic’s reach and impact. Yet, there are strategies available for threat mitigation. See: Ransomware, and update.

Challenges in Cyber “Net Assessment”: While leaders have long tried to gauge both cyber risk and security, actionable metrics remain elusive. Current metrics mainly determine if a system can be compromised without guaranteeing its invulnerability. It’s imperative not just to develop action plans against risks but to contextualize the state of cybersecurity concerning cyber threats. Despite its importance, achieving a reliable net assessment is increasingly challenging due to the pervasive nature of modern technology. See: Cyber Threat

Decision Intelligence for Optimal Choices: Numerous disruptions complicate situational awareness and can inhibit effective decision-making. Every enterprise should evaluate its data collection methods, assessment, and decision-making processes for more insights: Decision Intelligence.

Proactive Mitigation of Cyber Threats: The relentless nature of cyber adversaries, whether they are criminals or nation-states, necessitates proactive measures. It’s crucial to remember that cybersecurity isn’t solely the IT department’s or the CISO’s responsibility – it’s a collective effort involving the entire leadership. Relying solely on governmental actions isn’t advised given its inconsistent approach towards aiding industries in risk reduction. See: Cyber Defenses

The Necessity of Continuous Vigilance in Cybersecurity: The consistent warnings from the FBI and CISA concerning cybersecurity signal potential large-scale threats. Cybersecurity demands 24/7 attention, even on holidays. Ensuring team endurance and preventing burnout by allocating rest periods are imperative. See: Continuous Vigilance

Embracing Corporate Intelligence and Scenario Planning in an Uncertain Age: Apart from traditional competitive challenges, businesses also confront unpredictable external threats. This environment amplifies the significance of Scenario Planning. It enables leaders to envision varied futures, thereby identifying potential risks and opportunities. Regardless of their size, all organizations should allocate time to refine their understanding of the current risk landscape and adapt their strategies. See: Scenario Planning