Start your day with intelligence. Get The OODA Daily Pulse.

Start your day with intelligence. Get The OODA Daily Pulse.

Featured Image Source: OpenAI

In our December post, OODA Loop 2022: The Past, Present, and Future of ChatGPT, GPT-3, OpenAI, NLMs, and NLP, we focused on ChatGPT in the context of OODA Loop research and analysis over the course of the year on AI, machine learning, GPT-3, neural language models (NLM), and natural language processing (NLP) – posts from OODA CEO Matt Devost (where he weighed into the conversation on ChatGPT with his review of the potential disruptive performance of the latest version of the OpenAI GPT-3 platform) and OODA CTO Bob Gourley (on the most recent GPT-3 development in the context of NLP’s growth over the years and the inflection point it all represents as ChatGPT mainstream the technology). Bob followed up in early January with his perspective on Using OpenAI’s GPT To Produce Intelligence Reports At UnrestrictedIntelligence.com.

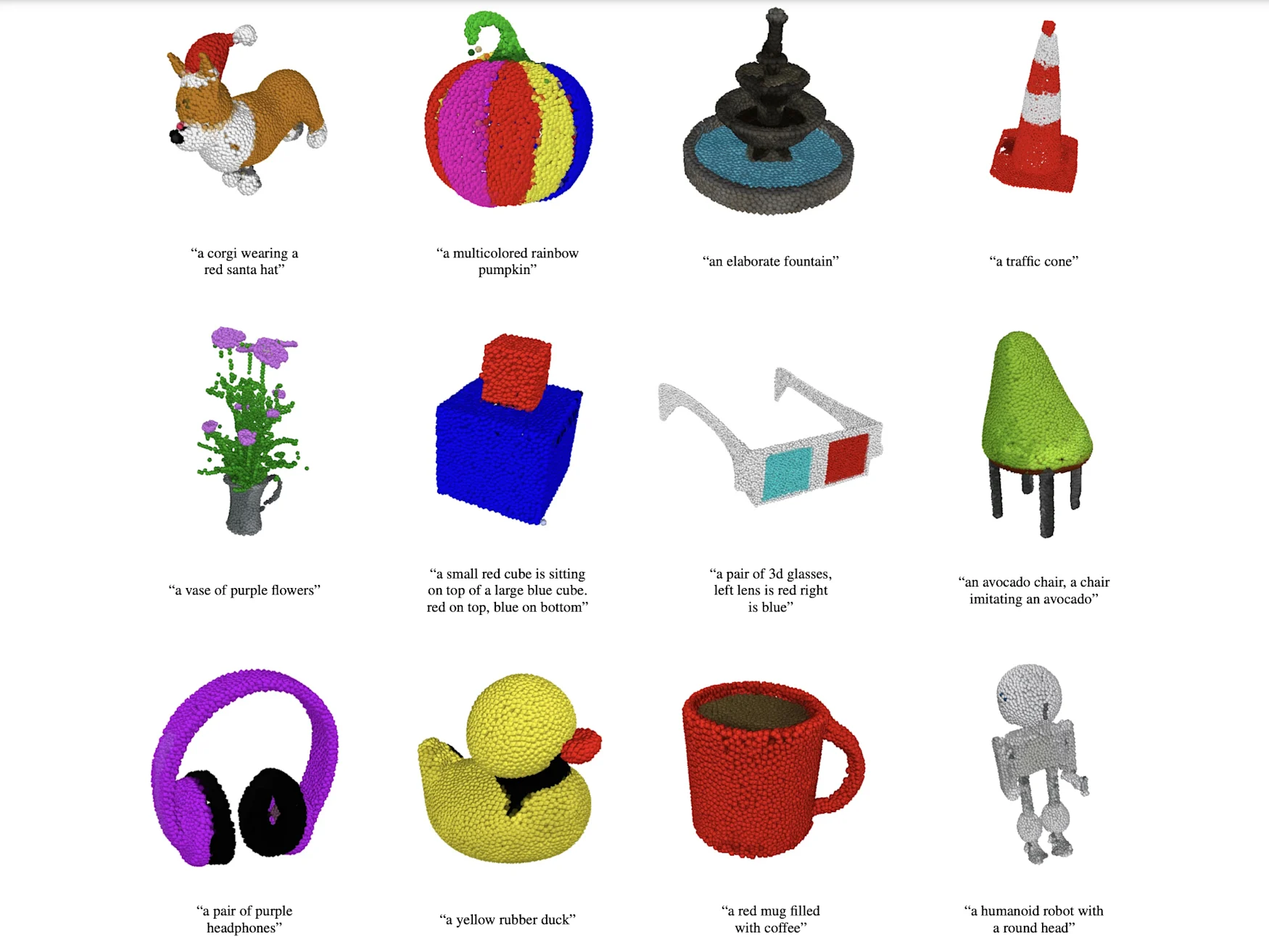

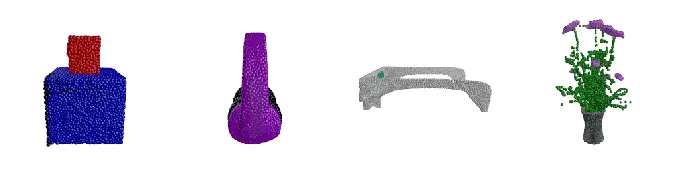

In the midst of the ChatGPT news frenzy, OpenAI released POINT-E, which extends the implications everyone is trying to sort out about the capabilities of ChatGPT into the 3-D realm and which may act as an accelerant in the production of the next generation 3-D interface of the global network, commonly known as the metaverse.

Giovanni Castillo, a Senior Metaverse Designer at Aquent, commented in a recent LinkedIn post:

“Alright we don’t have to 3d model tedious long hours anymore! Looks like OpenAI is releasing point E to do text to 3d generation. My first reaction was a sense of relief. 3d modeling should be easier and quicker. Well this may be a solution? #generativeAI #dall-e #aidesign #neoluddites swarming!” He included a link to Endgadet’s coverage of the OpenAI release of Point-E:

“OpenAI, the Elon Musk-founded artificial intelligence startup behind popular DALL-E text-to-image generator, announced on Tuesday [December 17, 2022] the release of its newest picture-making machine POINT-E, which can produce 3D point clouds directly from text prompts. Whereas existing systems like Google’s DreamFusion typically require multiple hours — and GPUs — to generate their images, Point-E only needs one GPU and a minute or two.

Image source: OpenAI

3D modeling is used across a variety of industries and applications. The CGI effects of modern movie blockbusters, video games, VR and AR, NASA’s moon crater mapping missions, Google’s heritage site preservation projects, and Meta’s vision for the Metaverse all hinge on 3D modeling capabilities. However, creating photorealistic 3D images is still a resource and time-consuming process, despite NVIDIA’s work to automate object generation and Epic Game’s RealityCapture mobile app, which allows anyone with an iOS phone to scan real-world objects as 3D images.

Text-to-Image systems like OpenAI’s DALL-E 2 and Craiyon, DeepAI, Prisma Lab’s Lensa, or HuggingFace’s Stable Diffusion, have rapidly gained popularity, notoriety, and infamy in recent years. Text-to-3D is an offshoot of that research. Point-E, unlike similar systems, “leverages a large corpus of (text, image) pairs, allowing it to follow diverse and complex prompts, while our image-to-3D model is trained on a smaller dataset of (image, 3D) pairs,” the OpenAI research team led by Alex Nichol wrote in Point·E: A System for Generating 3D Point Clouds from Complex Prompts, published [on December 16, 2022]. ‘To produce a 3D object from a text prompt, we first sample an image using the text-to-image model, and then sample a 3D object conditioned on the sampled image. Both of these steps can be performed in a number of seconds, and do not require expensive optimization procedures.’

Image source: OpenAI

If you were to input a text prompt, say, ‘A cat eating a burrito,’ Point-E will first generate a synthetic view 3D rendering of said burrito-eating cat. It will then run that generated image through a series of diffusion models to create the 3D, RGB point cloud of the initial image — first producing a coarse 1,024-point cloud model, then a finer 4,096-point. ‘In practice, we assume that the image contains the relevant information from the text, and do not explicitly condition the point clouds on the text,’ the research team points out.

These diffusion models were each trained on ‘millions’ of 3d models, all converted into a standardized format. ‘While our method performs worse on this evaluation than state-of-the-art techniques,’ the team concedes, ‘it produces samples in a small fraction of the time.’ If you’d like to try it out for yourself, OpenAI has posted the project’s open-source code on Github. (1)

Recent OODA Loop News Briefs on ChatGPT

https://oodaloop.com/technology/2023/01/09/cybercriminals-using-chatgpt-to-build-hacking-tools-write-code/

https://oodaloop.com/briefs/2023/01/02/experts-warn-chatgpt-could-democratize-cybercrime/

https://oodaloop.com/archive/2023/01/04/using-openais-gpt-to-produce-intelligence-reports-at-unrestrictedintelligence-com/

https://oodaloop.com/ooda-original/disruptive-technology/2022/12/22/ooda-loop-2022-the-past-present-and-future-of-chatgpt-gpt-3-openai-nlms-and-nlp/

https://oodaloop.com/archive/2022/12/12/the-great-gpt-leap-is-disruption-in-plain-sight/

https://oodaloop.com/archive/2022/12/05/we-are-witnessing-another-inflection-point-in-how-computers-support-humanity/