Start your day with intelligence. Get The OODA Daily Pulse.

Featured Image Source: Comic Book Resources

Extremists co-opt Minecraft, Call of Duty and more to spread messages of hate. https://t.co/VZqU6Qu6ra pic.twitter.com/lcUn7HBibb

— CBR (@CBR) September 23, 2021

In yet another example of a successful public/private policy research collaboration and in a continuation of the topic discussed by Brian Jenkins at the OODA Network November Monthly Meeting – domestic political extremism – we turn to a report released in July of this year: Breaking the Building Blocks of Hate: A Case Study of Minecraft Servers, which found that “one-in-four moderation actions across three private servers of the popular video game Minecraft are in response to online hate and harassment.” (1)

The public and private sector organizations and the individual contributors that collaborated on this report are of note:

Anti-Defamation League (ADL): ADL is the leading anti-hate organization in the world. Founded in 1913, its timeless mission is “to stop the defamation of the Jewish people and to secure justice and fair treatment to all.” Today, ADL continues to fight all forms of antisemitism and bias, using innovation and partnerships to drive impact. A global leader in combating antisemitism, countering extremism, and battling bigotry wherever and whenever it happens, ADL works to protect democracy and ensure a just and inclusive society for all. Austin Botelho works as a machine learning engineer.

Anti-Defamation League (ADL) Center for Technology and Society (CTS): The ADL Center for Technology & Society leads the global fight against online hate and harassment. In a world riddled with antisemitism, bigotry, extremism, and disinformation, we act as fierce advocates for making digital spaces safe, respectful, and equitable for all people. Building on ADL’s century of experience building a world without hate, the Center for Technology and Society (CTS) serves as a resource to tech platforms. It develops proactive solutions to fight hate both online and offline. CTS works at the intersection of technology and civil rights through education, research, and advocacy.

Middlebury Institute of International Studies is a graduate school of Middlebury College, based in Monterey, California. Alex Newhouse is the deputy director of its Center for Extremism and Counterterrorism.

Take This is a 501(c)(3) mental health non-profit that destigmatizes mental health challenges and provides mental health resources and support for the gaming industry and gaming communities. Dr. Rachel Kowert serves as its director of research.

GamerSafer’s vision is to scale online safety, and positive and fair play experiences to millions of players worldwide. Using computer vision and artificial intelligence, its identity management solution helps multiplayer games, esports platforms, and online communities defeat fraud, crimes, and disruptive behaviors.

The online game Minecraft, owned by Microsoft, has amassed 141 million active users since it was launched in 2011. It is used in school communities, among friend groups, and even has been employed by the U.N. Despite its ubiquity as an online space, little has been reported on how hate and harassment manifest in Minecraft, as well as how it performs content moderation. To fill this research gap, Take This, ADL and the Middlebury Institute of International Studies, in collaboration with GamerSafer, analyzed hate and harassment in Minecraft based on anonymized data from January 1st to March 30th, 2022 consensually provided from three private Minecraft servers (no other data was gathered from the servers except the anonymized chat and report logs used in this study). While this analysis is not representative of how all Minecraft spaces function, it is a crucial step in understanding how important online gaming spaces operate, the form that hate takes in these spaces, and whether content moderation can mitigate hate.

To gain a better understanding of hate speech within gaming cultures, ADL partnered with Take This, the Middlebury Institute of International Studies, and GamerSafer to examine speech patterns in public and private chat logs from private Minecraft Java servers. The decentralized, player-run nature of Minecraft Java edition provides a novel opportunity to assess hate and harassment in gaming spaces.

After examining chats and user reports from data provided by GamerSafer, [the researchers] found:

We hope this report provides a benchmark on the scope and form that hate takes in the online games millions play every day.

Minecraft is a popular sandbox-style game developed by Mojang Studios in 2011 and was subsequently acquired by Microsoft in 2014. Players can create and break apart various kinds of blocks in three-dimensional worlds. Minecraft is often framed as a “sandbox” because, like a traditional playground sandbox, only a player’s imagination limits what they can do. There are no real goals or objectives except the ones that players set for themselves.

There are two versions of Minecraft: the Bedrock and Java editions. The Java edition primarily allows players to independently host and privately run game servers with no oversight from Microsoft whereas Bedrock is primarily hosted by Mojang, which partners closely with the servers’ users on content moderation. In June 2022, Minecraft announced it was enabling players of the Java edition to report violative content to the central Minecraft team at Mojang Studios. Simultaneously, Mojang confirmed that this effort would only focus on what players report and exclude any proactive monitoring or moderation.

The size and scope of these servers can vary greatly. For example, an individual can host a small server open to a handful of people living in a particular neighborhood to be used as a creative after-school space. A server could be used by an entire classroom or a school to explore group learning in a digital space. It may also be a server hosted by an organization such as the United Nations to provide young people with an interactive experience in urban planning. Since server maps are based on user-generated content, players can also spread hateful concepts and congregate with like-minded people. Private Minecraft servers have been found to host the creation and re-enactment of Holocaust concentration camps. Until recently, the administrators of Java servers (such as educators setting up a Minecraft server for their students) enacted all content moderation decisions for that server. With the recent advent of player reporting, the Minecraft team at Microsoft will also play a role in content moderation, though what that role is remains to be seen.

[The researchers] analyzed public and private chat data provided by GamerSafer from three Minecraft Java servers to measure the levels of hateful speech in gaming spaces. The three servers varied in size and moderation capacity.

Server 1 housed a large (roughly 20,000 players), primarily adolescent audience (14-18 years old), dozens of server staff, and upheld very strict rule enforcement. The player-to-moderator ratio was around 465:1. Competitive player versus player (PvP) play was optional on this server. Server 1 was the most active of the three servers and constituted 94% of the analyzed data.

Server 2 had a smaller (almost 1,000 players), slightly older audience (averaging 15-20 years old) and only two staff members that implemented minimal to no rule enforcement. The player-to-moderator ratio was around 500:1. On this server, competitive PvP was encouraged.

Server 3 was the smallest of the three servers, housing around 400 players. Its player-to-moderator ratio was by far the lowest, at around 41:1. This server had a primarily older audience of adolescents and adults (aged 16+) and a moderation team of 10 staff members. Gameplay was largely collaborative, with PvP limited to small, designated areas. Of all the servers, Server 3 had the most extensive guidelines and the most active moderation team, but staff had trouble enforcing the rules.
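The player-to-moderator ratios quoted for the three servers can be sanity-checked with a few lines of arithmetic. The sketch below is illustrative only: the function name and variables are assumptions, and the player counts are the rounded figures from the text ("almost 1,000", "around 400", "roughly 20,000"), so the results only approximate the reported ratios.

```python
def players_per_moderator(players, moderators):
    """Return the number of players per moderator, rounded to a whole number."""
    return round(players / moderators)

# Server 2: almost 1,000 players and two staff members (reported as about 500:1).
server_2_ratio = players_per_moderator(1000, 2)

# Server 3: around 400 players and 10 staff members (reported as about 41:1;
# the small gap comes from using the rounded player count).
server_3_ratio = players_per_moderator(400, 10)

# Server 1: the text gives roughly 20,000 players and a 465:1 ratio but only
# "dozens" of staff, so the staff count here is inferred, not reported.
implied_server_1_staff = round(20000 / 465)

print(server_2_ratio, server_3_ratio, implied_server_1_staff)
```

The inferred staff count for Server 1 is consistent with the "dozens of server staff" described above, but it is a back-of-the-envelope estimate rather than a figure from the report.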

GamerSafer offers a suite of safety tech solutions to gaming companies, including a special plugin for Minecraft, supporting server staff and moderators to manage infractions and in-game reports. Hate speech is one of 37 ban categories in the Minecraft plugin. Tracking incidents of reported behavior among game players across servers, the report logs contain 458 total disciplinary actions against 374 unique users.

The analysis was twofold. First, we examined the formal reports made to moderators and the moderator actions taken in response to any form of hateful or harassing behavior. This was done to better understand the nature of the servers and broad patterns of behavior. Second, we conducted a textual analysis of the in-game chat to examine speech patterns and to identify hateful or harassing actions that may not have been logged by the moderators.

Many in-game offenders are repeat offenders. Almost a fifth of users (64) had multiple actions taken against them during data collection. Of the actioned users, 90 received a permanent ban. Of those permanently banned users, 21 (23%) had received prior actions (temporary bans, mutes, and warnings). Server 1 accounted for 93.9% of these reports.
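The shares quoted above follow directly from the counts in the report logs (458 actions against 374 unique users). As a minimal sketch, with variable and function names that are illustrative assumptions rather than anything from the report, the arithmetic can be reproduced like this:

```python
# Counts quoted in the text; the names are hypothetical labels for this sketch.
total_actions = 458
actioned_users = 374
repeat_offenders = 64
permanently_banned = 90
banned_with_prior_actions = 21

def share(part, whole):
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)

# "Almost a fifth of users" had multiple actions taken against them.
repeat_share = share(repeat_offenders, actioned_users)

# Share of permanently banned users who had received prior actions.
prior_share = share(banned_with_prior_actions, permanently_banned)

# Share of all actioned users who ended up permanently banned.
permanent_ban_share = share(permanently_banned, actioned_users)

# Average number of disciplinary actions per actioned user.
actions_per_user = round(total_actions / actioned_users, 2)

print(repeat_share, prior_share, permanent_ban_share, actions_per_user)
```

The last two values are derived here for context only; the text does not state them directly.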

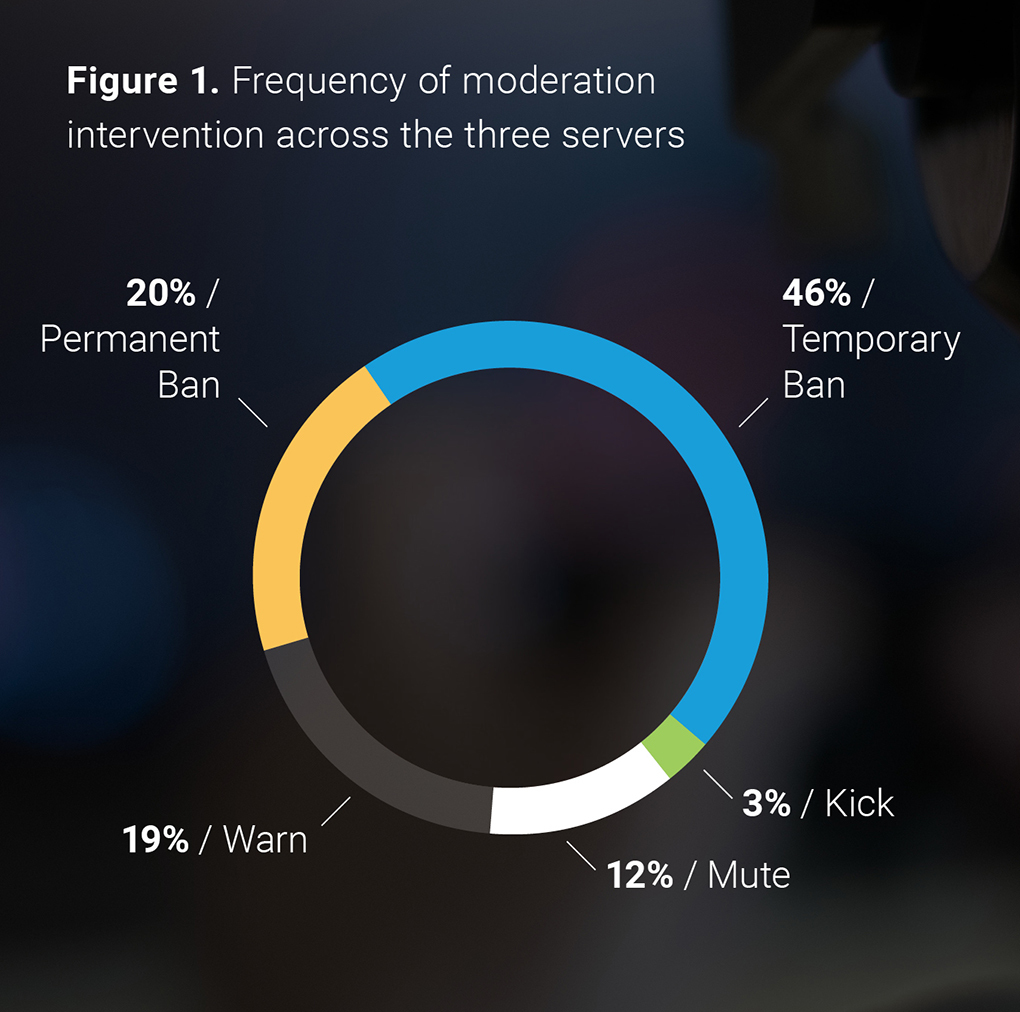

Moderators have the ability to ban players. Two-thirds of the actions taken by moderators were bans. As seen in Figure 1, a temporary ban from the server was the primary action (46%), followed by a permanent ban (20%), and a verbal warning (19%). We do not know with certainty how closely bans were enacted following a violation.

[Figure 1. Frequency of moderation intervention across the three servers.]

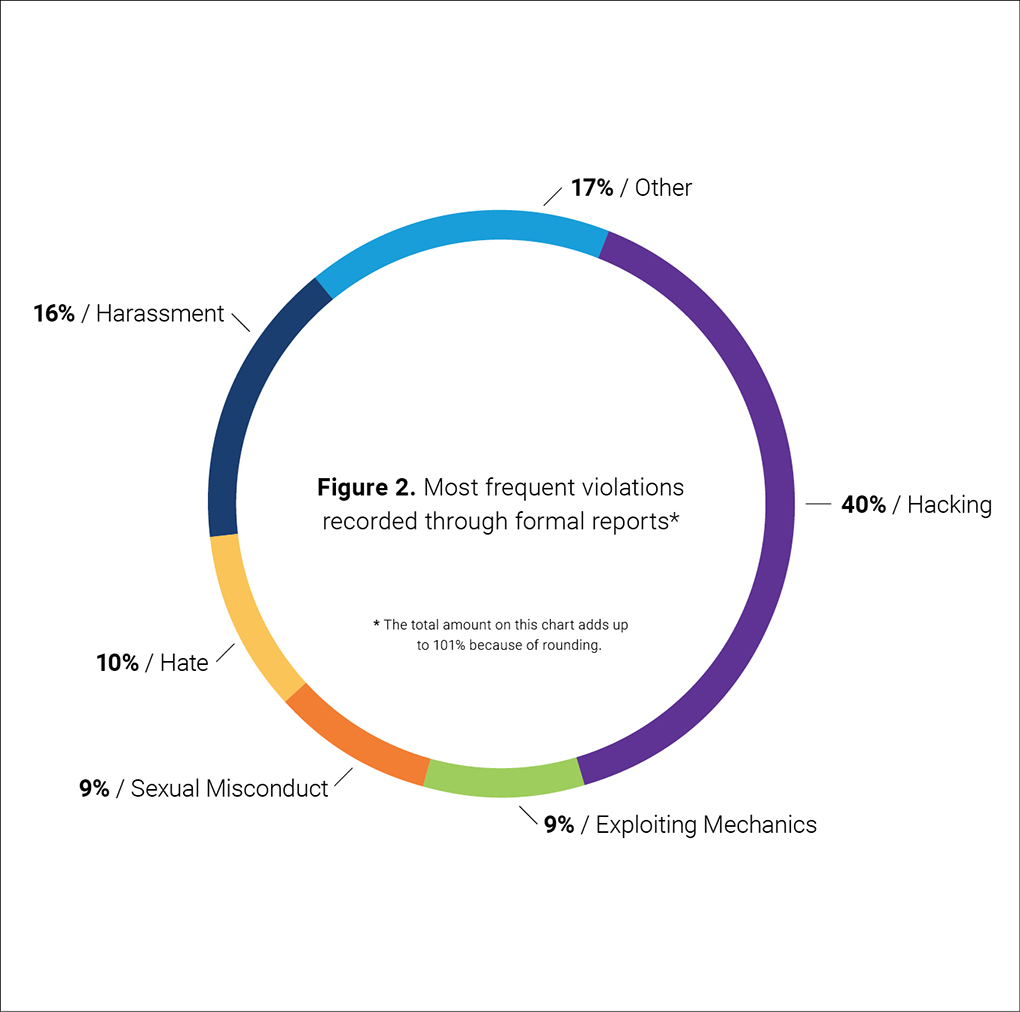

Figure 2 shows that the most commonly reported violations were hacking, or trying to create an advantage beyond normal gameplay (40% of all reports); harassment, including hostility, spamming, bullying, trolling, and threats (16% of all reports); hate, or attacks rooted in prejudice towards someone’s race or ethnicity, gender, sexual orientation, and other forms of identity (10% of all reports); sexual misconduct in the form of inappropriate comments (9% of all reports); and exploiting mechanics, or using a bug or glitch in a game for an advantage (9% of all reports).

[Figure 2. Most frequent violations recorded through formal reports.]

The authors of the report made the following recommendations. Some are specific to the gaming industry, but we found that they apply equally to many of the challenges of addressing domestic political extremism on a variety of online platforms:

“Working with real-time chat data, this deep dive into Minecraft Java servers provided insight into hate and harassment within games. Drawing from our findings, we offer directions for future work within this space.”

(1): Hate and Harassment Drive One-in-Four Moderator Actions on Minecraft Servers, Study Finds

Also: Breaking the Building Blocks of Hate: A Case Study of Minecraft Servers

The full report can be found at Breaking the Building Blocks of Hate: A Case Study of Minecraft Servers (PDF of Minecraft Report)
