Start your day with intelligence. Get The OODA Daily Pulse.

We are entering into the Era of Code. Code that writes code and code that breaks code. Code that talks to us and code that talks for us. Code that predicts and code that decides. Code that rewrites us. Organizations and individuals that prioritize understanding how the Code Era impacts them will develop increasing advantage in the future.

At OODAcon 2023, we will be taking a closer look at Generative AI innovation and the impact it is having on business, society, and international politics. IQT and the Special Competitive Studies Project (SCSP) recently weighed in on this Generative AI “spark” of innovation that will “enhance all elements of our innovation power” – and the potential cybersecurity conflagrations that may also be lit by that same spark. Details here.

At OODAcon 2022, we predicted that ChatGPT would take the business world by storm and included an interview with OpenAI Board Member and former Congressman Will Hurd. Today, thousands of businesses are being disrupted or displaced by generative AI. An upcoming session at OODAcon 2023 will take a closer look at this innovation and the impact it is having on business, society, and international politics.

The following resources are an OODAcon 2023 pre-read from two highly respected outlets on generative AI, its impact on innovation, the startup ecosystem, cybersecurity risks and national security strategy – including Generative AI as a “unique opportunity to lead with conviction as humanity enters a new era…informed by the generative AI models that enhance all elements of our innovation power.”

The OODA Almanac series is intended to be a quirky forecast of the themes that the OODA Network thinks will be emergent each year. You can review our 2022 Almanac and 2021 Almanac, both of which have held up well. The theme for last year was exponential disruption, which was carried through into our annual OODAcon event. This year’s theme is “jagged transitions,” which is meant to evoke the challenges inherent in adopting disruptive technologies while still entrenched in low-entropy old systems and in the face of systemic global community threats and the risks of personal displacement.

“Are there deterrents and defenses we can put in place to prevent adversarial code from being impactful or effective?…we will likely need innovative approaches to defend against code with code. What is a responsible pathway towards code autonomy?”

If a tool like ChatGPT is available to all, the advantage goes to those who know how to derive the most value from the tool. Organizations and societies that look to utilize these tools rather than ban them will maximize this advantage. Instead of banning ChatGPT in schools, we should be teaching students how to use the capability to advance their knowledge, improve their ability to learn, and communicate ideas.

We will also rapidly enter into an age of regulatory arbitrage around the acceptable use of new technologies. For example, there are existing governors on how a tool like ChatGPT can be used that attempt to diminish its effectiveness as an offensive tool or in the creation of misinformation or other hostile political objectives. It is foolish to think that these constraints will be built into AI systems developed by adversaries and competitors. The same can be said for the boundaries around the coding of human DNA in developments like CRISPR. The boundaries of re-coding humans will continue to be pushed as the technology becomes more accessible globally.

What does the concept of Code Defense look like in such an environment? Are there deterrents and defenses we can put in place to prevent adversarial code from being impactful or effective? With the rapid pace of development and machine-speed OODA Loops, we will likely need innovative approaches to defend against code with code. What is a responsible pathway towards code autonomy?

From IQT:

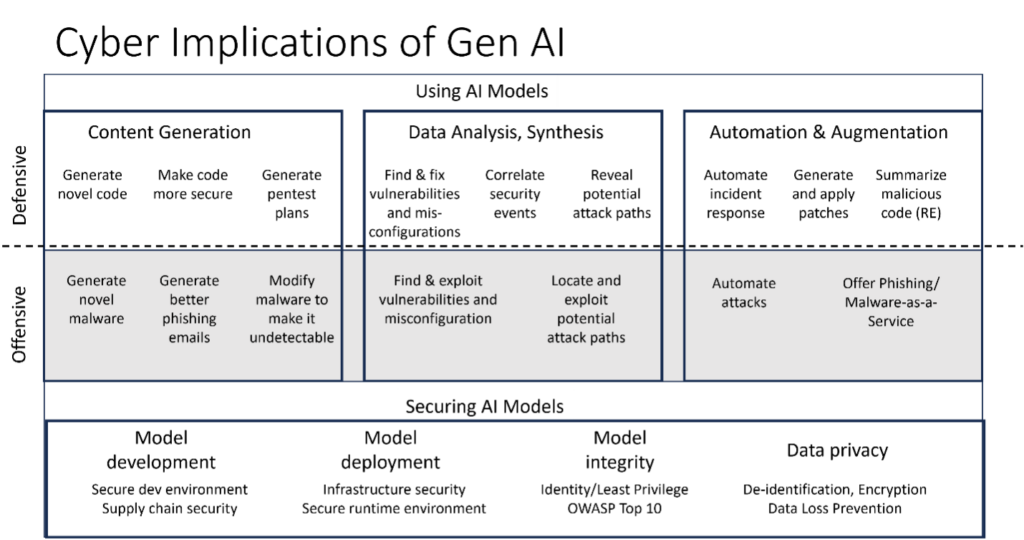

“As its name suggests, Generative AI (GenAI) is great at generating all manner of stuff, from silly Jay-Z verses to convincing news summaries. What makes it so powerful is its ability to write things, whether those things are emails or computer code. This is one of the three main domains of GenAI’s impact on cybersecurity that we highlighted in our introduction to this blog series…In this post, we’ll discuss why this ability to write natural language and code is both a big headache for—and, potentially, a great help to—cyber defenders.”

Summary of the Report:

While attackers are undoubtedly exploiting these new tools, developers, security teams, and an ecosystem of business stalwarts and startups are mobilizing to tap GenAI tools to bolster cyber defenses.

Although GenAI has undoubtedly given malware-makers a powerful new tool to use, on the flipside it could also frustrate hackers’ efforts by helping developers create code that’s more secure. Offerings from the likes of GitHub Copilot, Codeium, Replit, TabNine, and others use AI to autocomplete code and refactor segments of code in ways that are intended to reflect security best practices.

While these code-generation tools are still in their infancy, nearly four out of every five developers already believe they have improved their organization’s code security, according to a report from Snyk. Driving this result are features such as AI-based vulnerability-filtering to block insecure coding patterns in real time (including hard-coded credentials, path injections, and SQL injections) and fine-tuning models (or even training them from scratch) on security-hardened proprietary databases.
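To make the idea of filtering insecure coding patterns concrete, here is a minimal Python sketch. It is not how any of the tools named above work: those rely on AI models, while this stand-in uses two hand-written regular expressions, and the rule names, the `flag_insecure_patterns` function, and the sample snippet are all hypothetical. It only illustrates what "flagging a hard-coded credential or a string-built SQL query" might look like in practice.

```python
import re

# Hypothetical, deliberately naive rules. Real tools use trained models and
# far richer analysis; these two patterns are assumptions for illustration.
RULES = {
    "hard-coded credential": re.compile(
        r"\b(password|passwd|secret|api_key|token)\s*=\s*['\"][^'\"]+['\"]",
        re.IGNORECASE,
    ),
    "SQL built by string concatenation": re.compile(
        r"\b(select|insert|update|delete)\b[^\n]*['\"]\s*\+",
        re.IGNORECASE,
    ),
}


def flag_insecure_patterns(source):
    """Return (line_number, rule_label) pairs for lines matching a rule."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for label, pattern in RULES.items():
            if pattern.search(line):
                findings.append((lineno, label))
    return findings


# Sample code to scan: two risky lines and one parameterized query.
sample = '''password = "hunter2"
query = "SELECT * FROM users WHERE name = '" + name + "'"
rows = cursor.execute("SELECT * FROM users WHERE name = ?", (name,))
'''

findings = flag_insecure_patterns(sample)
for lineno, label in findings:
    print(f"line {lineno}: {label}")
```

The third line of the sample passes because the user-supplied value is bound as a query parameter rather than concatenated into the SQL text, which is the pattern such filters are meant to steer developers toward.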

All this code-related effort matters more than ever because GenAI promises to make the challenge of managing “big code” even harder. While the technology can help us build more secure software it’s also going to unleash a tsunami of new code by making it even easier to create programs, some of which will be solely generated by AI tools themselves. Managing larger codebases with their associated complexity will complicate cyber defenders’ task. Dealing with a GenAI-created wave of phishing emails and other content will also be a significant challenge in a world where AI agents and personal assistants are plentiful, making it even harder to distinguish suspect AI-generated messages from legitimate ones created by trusted AI agents.

Also from IQT:

“You couldn’t miss the theme of Generative Artificial Intelligence (GenAI) at the Black Hat cybersecurity conference which took place in Las Vegas back in August. The conference’s opening keynote focused on the promise and perils of this emerging technology, likening it to the first release of the iPhone, which was also insecure and riddled with critical bugs. Talk about the risks associated with this technology isn’t new: GenAI and Large Language Models (LLMs) have been around since 2017. What is new, however, is the introduction of ChatGPT in November 2022, which generated widespread attention and has really spurred the security industry to focus much more on the implications of GenAI for cybersecurity. [In this post IQT explores] some of those implications, assess how the double-edged digital sword of GenAI could shift the playing field in favor of both defenders and attackers depending on the circumstances, and highlight where startup companies are leading the way in harnessing the technology.”

Summary of the Report:

There are several reasons GenAI is causing concern in cyber circles. First, it offers a new set of capabilities over and above previous AI technology, which was primarily used to make decisions or predictions based on analysis of data inputs. Thanks to advances in computing and algorithms, GenAI can now be applied to a new category of tasks in cyber: developing code, responding to incidents, and summarizing threats.

These issues are accentuated by the fact that the technology is evolving fast. AI copilots, which learn from users’ behavior and offer guidance and assistance with tasks, are one example of this change. Large companies such as Microsoft with Security Co-Pilot and CrowdStrike with Charlotte AI are making headlines with tools to automate incident response and quickly summarize key endpoint threats using the technology. Startups, too, are integrating GenAI into their offerings and entrepreneurs are launching new companies to leverage GenAI in the cyber domain.

From the preface to the SCSP report, by SCSP Chair Eric Schmidt and CEO Ylli Bajraktari:

“Competition is SCSP’s organizing principle. Strategic competition between the United States and the People’s Republic of China (PRC), amidst the current wave of technological innovation, is the defining feature of world politics today. The United States must continue to out-innovate competitors and lead the development of future technologies. The geopolitical, technological, and ideological implications of this competition cannot be addressed in isolation – they must be managed together in a comprehensive competitive approach. To that end, we seek to help develop a new public-private model that is better suited to the geopolitical uncertainties of this competitive era, one in which technology will ultimately determine who leads and who follows.

To meet this moment, SCSP launched a focused effort to assess what the rise of GenAI means for the United States, our allies and partners, and the competition with strategic rivals. Bringing together leading experts in this fast-moving field, the SCSP Generative AI Task Force developed a range of recommendations – incorporated into this report – for the U.S. government, allies and partners, industry, and academia to address the challenges and opportunities that GenAI presents for national competitiveness. These included ways to foster responsible innovation and harness the transformative power of GenAI, while addressing ethical concerns and potential risks.

The GenAI moment demands similar action and a comparable sense of urgency and consequence. It is incumbent upon us to ensure that the ongoing AI revolution aligns with our democratic values and promotes global freedoms, given the profound implications of this technology for our national security and society. The journey of AI – particularly GenAI – is far from over; we are only just beginning to understand the ripple effects of this transformative era. But with careful navigation and proactive strategies, we can steer this Age of AI towards a more secure and prosperous future.”

Part I of this report explores the geopolitical implications of recent breakthroughs in GenAI. It presents the national security context, technological trajectory, and potential impacts of this disruptive new technology to inform effective, coordinated U.S. government actions.

Part II of this report includes a series of memorandums to senior U.S. government officials on the near-term implications of GenAI specific to their domains, along with policy recommendations for adapting to rapidly changing conditions.

Recommendations from the SCSP report:

The competition for power and influence in this era will require that the United States, alongside allies and partners, develop government-supported, public-private partnerships that enable continuous technology innovation and deployment. This moment provides the United States government with a unique opportunity to lead with conviction as humanity enters a new era.

Two Principles Should Guide U.S. Action

Two Objectives Will Define Success

Three Moves Are Required to Achieve Our Objectives

The Origins Story and the Future Now of Generative AI: The fast moving impacts and exponential capabilities of generative artificial intelligence over the course of just one year.

Generative AI – Socio-Technological Risks, Potential Impacts, Market Dynamics, and Cybersecurity Implications: The risks, potential positive and negative impacts, market dynamics, and security implications of generative AI have emerged – slowly, then rapidly, as the unprecedented hype cycle around artificial intelligence settled into a more pragmatic stoicism – with project deployments – over the course of 2023.

Using AI for Competitive Advantage in Business: OODA principals and analysts have years of real-world practitioner experience in fielding AI capabilities, including the foundational infrastructure required for successful AI. We capture lessons learned and best practices in our C-Suite guide to AI for competitive advantage. Explore Using AI for Competitive Advantage.

Data As A Weapon System: Are you making the most of your data holdings? If you want to leverage your data for the betterment of your business, you need to treat it like a weapon and use it to gain advantage over others. This is not just about your technical architecture; it is about your attitude and the approach you take to being proactive. For our best practices on how to do so, see the OODA Guide to Using Your Data As A Weapon.