Start your day with intelligence. Get The OODA Daily Pulse.


National security policy and cybersecurity innovation at the physical layer, both as a way to solve some vexing security issues and as a strategic opportunity for technological advantage and American competitiveness, are starting to show an interesting sensemaking pattern across a variety of industry sectors and think tank sources. Take a look here.

The U.S. should allow sales of Nvidia’s artificial intelligence (AI) chips only to buyers who agree to use the technology ethically, Google DeepMind’s co-founder Mustafa Suleyman told the Financial Times on Friday. The U.S. should enforce minimum global standards for the use of AI, and companies should at a minimum agree to abide by the same pledge made by leading AI firms to the White House, Suleyman said.

In July, AI companies including OpenAI, Alphabet and Meta Platforms made voluntary commitments to the White House to implement measures such as watermarking AI-generated content to help make the technology safer. “The US should mandate that any consumer of Nvidia chips signs up to at least the voluntary commitments — and more likely, more than that,” Suleyman said. The U.S. has expanded restrictions on exports of sophisticated Nvidia and Advanced Micro Devices artificial-intelligence chips beyond China to other regions, including some countries in the Middle East.

Mustafa Suleyman is also the chief executive of Inflection AI, a Microsoft-backed AI startup that raised $1.3 billion in June from Nvidia and other firms. In May, Inflection released an AI chatbot named Pi that uses generative AI technology to interact with users through conversations, in which people can ask questions and share interests. Executives and experts have been calling on AI developers to work with policymakers on governance and regulatory authorities.

SCSP Senior Fellow Rick Switzer is on a one-year sabbatical from the Department of State and is currently working with the Special Competitive Studies Project (SCSP). Prior to joining SCSP, Rick was a State Department visiting professor at the National Intelligence University teaching graduate courses on China’s economy and innovation system. Rick also served as a member of the Secretary of State’s Policy Planning Council. From 2018 to 2019 he was a Senior State Department Advisor to the Department of Defense working with the Air Force and the Army. Preceding that he was the Environment, Science, Technology and Health Minister Counselor at Embassy Beijing, the largest science section in the world. Prior to joining government Rick co-founded a wireless technology start-up and also conducted innovation policy research at the University of California.

In February, Switzer penned The Next Pandemic Could Be Digital: Open Source Hardware and New Vectors of National Cybersecurity Risk. Following is his perspective on the challenges ahead at the hardware level:

Executive Summary

Open source technology is a powerful economic phenomenon that has unlocked economic value, but it also presents significant, yet poorly understood, challenges to national and economic security. Media and policy attention to date has focused on open source software: GitHub, for example, is an open source database where code is posted for all to use. Today, however, open source principles are being applied to computer hardware via initiatives like RISC-V (microprocessors); the Open Compute Project, or OCP (servers); and Open Radio Access Networks, or ORAN (networking equipment). If the current trajectory continues, it could lead to the commodification of the entire hardware stack, from microprocessors to server racks to routers, displacing today’s industry leaders – the majority of which are headquartered in the United States or friendly nations.

With the rapid growth of the Internet of Things (IoT) and its application to critical infrastructure, widespread adoption of open source hardware (OSHW) and related standards risks creating new attack vectors for nation-state actors. ‘Hardware trojans’ allow cyber vulnerabilities to be inserted directly onto a chip or into a router. Even when vulnerabilities can be detected, the commodification of OSHW makes it increasingly difficult to trace a vulnerability back to its source. As the world’s leading producer of lagging-edge microelectronics and ‘white box’ electronics, the People’s Republic of China (PRC) is best positioned to capitalize on these factors. (1)

At present, the United States is not organized to prevent the OSHW revolution from accelerating cyberattacks emanating from the hardware layer. OSHW is poorly understood outside technology circles, including within the U.S. Government, which lacks a coherent set of policies to address the national security challenges associated with OSHW. (2) To defuse this threat, the United States must take rapid action. Sourcing from trusted suppliers – which I argue means restricting the use of core digital infrastructure components manufactured in countries of concern – is a key step to ensure that chips, circuit boards, and other hardware are not corrupted by malign actors.

To implement this strategy, U.S. authorities should:

What began as a disruption of service from a series of regional banks has now expanded across the majority of the nation’s cloud and internet router infrastructure, leading to severely limited internet access and an almost complete cessation of digital financial transactions. The original outages were initially traced to a service provider of cloud-based data storage and backend processing for regional banking and financial services firms, but outages now appear to be more widespread, covering close to 40 percent of all cloud-based data storage and processing services across the country. Just over a week after the crisis began, there are increasing reports of protests and looting of grocery stores where consumers encounter difficulties purchasing food due to the stores’ inability to process non-cash transactions. Banks are similarly inundated with customers demanding to withdraw cash from their accounts to pay for daily necessities. Multiple agencies and private sector actors have scoured the affected data centers for the cause with most focused on cyber intrusion and offensive software-based cyberattacks.

Eventually the Defense Microelectronics Activity discovered the issue, identifying a hardwired, hardware-based on-off switch embedded in a series of voltage regulating chips that caused a chokepoint, preventing logic chips from connecting to the storage chips in the servers. Tracking the exact source of the affected chips has proven difficult, as virtually all of the affected hardware consists of commodity microelectronics components based upon open hardware standards that are sourced from the cheapest available supplier; however, virtually all of it comes from hundreds of factories dispersed across China. Initial estimates show it will take 12-18 months to identify, remove, and replace all the corrupted hardware. However, as the only sources for these commodity components are the same factories where they were originally procured, it is unclear if this strategy is feasible. The total economic cost of the crisis is expected to run into the trillions of dollars in damages and lost GDP, putting tens of millions of Americans out of work and leading to unprecedented social unrest.

For Switzer’s report in its entirety, go to this link.

Notes:

(1) The People’s Republic of China (PRC) is best positioned to capitalize on these factors. Beijing has embraced OSHW as a disruptive offset, and PRC law requires firms to comply with the directives of the party-state.

(2) The PRC has both the means and the motive to weaponize the OSHW revolution.

“While the United States is currently the leader in generative artificial intelligence (GenAI), there is no guarantee that we will remain in the lead without a focused national effort. Our primary rival for AI leadership, the People’s Republic of China (PRC), understands the strategic and economic significance of AI and has a track record of executing well-resourced technology strategies that have produced results.1 Nations that harness the potential of GenAI will increase productivity, a key economic measure that underpins economic growth, higher standards of living, and the ability to finance all other national priorities. To unlock these benefits, the United States should bolster competitiveness across the fundamental building blocks that underpin AI leadership.

Compute: The United States should assure access to compute resources via cloud providers, encourage sustainable AI techniques, protect U.S. advantages in microelectronics, and lead in accelerating hardware innovation.

Data: The United States should develop a whole-of-nation strategy for digital infrastructure and create a trusted regulatory framework that harnesses data for social and economic benefit while paving the way to increased digital trade with allies and partners.

People: The United States should pursue immigration reforms to continue to attract top AI talent, establish a National Commission on Automation and the Future of Work, and develop curriculum guidelines to safely deploy GenAI in the classroom.

This memo outlines 1) the economic implications of GenAI and 2) steps the United States can take, along with allies and partners, to capture its full potential and sustain democratic leadership in this future-shaping technology.”

For the purposes of this post, we are focusing on the “Compute” implications as set out by this SCSP report, specifically: what is required to build an economic ecosystem in which the upside of generative AI will take hold and foster innovation and growth? Hardware level innovation figures prominently:

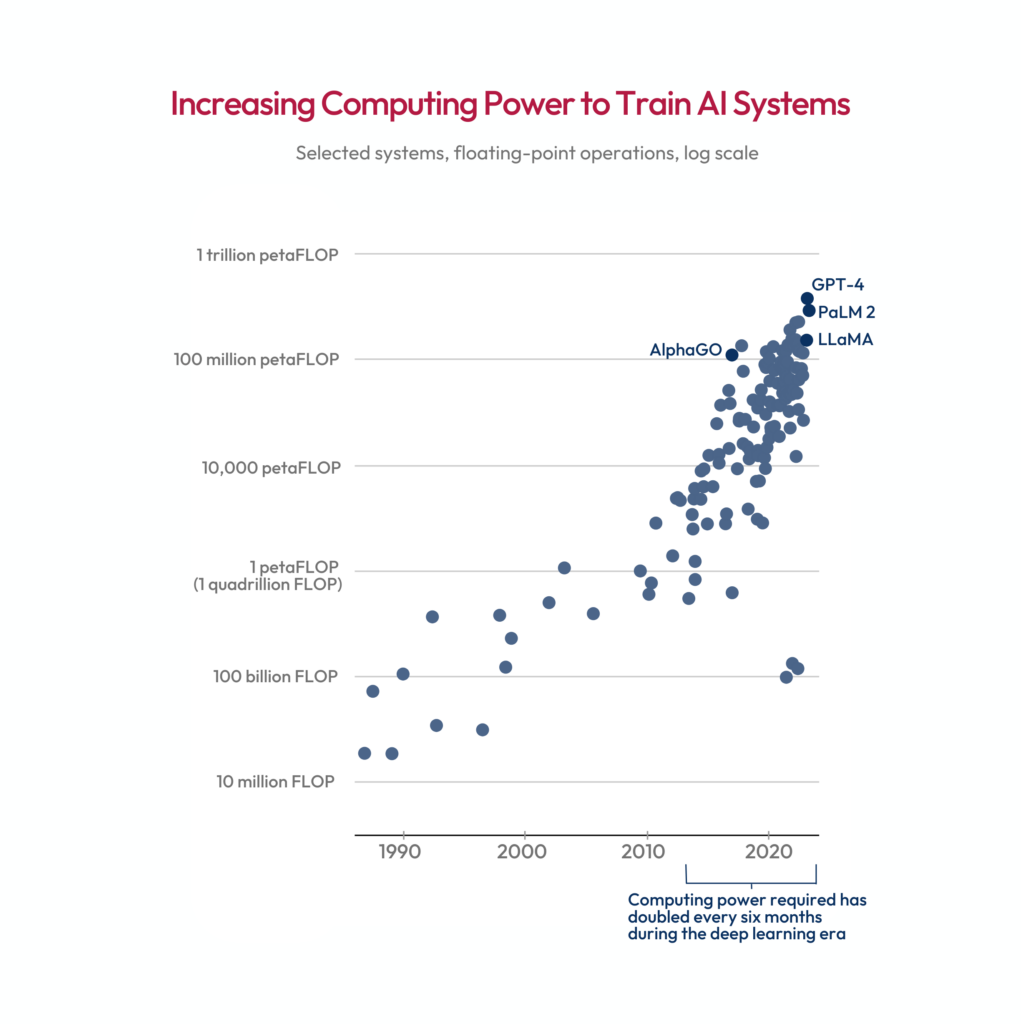

AI models require huge amounts of computing power, both for training runs and for inference (retrieval of information). Over the past decade, compute demand for AI has grown at an alarming rate. Since the first deep neural network-based AI model was demonstrated in 2012, the amount of compute resources needed to train frontier models has increased by roughly 55 million times – a doubling time of about six months.22 Today, the largest training runs take months and require supercomputers outfitted with thousands of specialized GPUs.23
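As a rough sanity check on the figures above, the short Python sketch below converts a 55-million-fold increase into a number of doublings and an implied doubling time. The end year of 2023 is an assumption made for illustration; the text gives only the 2012 starting point and the growth factor.

```python
import math

# Figures quoted in the text above.
growth_factor = 55_000_000  # increase in training compute since 2012
start_year = 2012

# Assumption (not stated in the text): growth is measured through 2023.
end_year = 2023

# Number of times compute must double to grow by the quoted factor.
doublings = math.log2(growth_factor)

# Spread those doublings evenly over the elapsed months.
months_elapsed = (end_year - start_year) * 12
doubling_time_months = months_elapsed / doublings

print(f"doublings: {doublings:.1f}")
print(f"implied doubling time: {doubling_time_months:.1f} months")
```

Under that assumed window the result lands between five and six months, which is consistent with the "about every six months" characterization.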

Source: SCSP

The United States enjoys a significant lead over the PRC in terms of both technological development and access to compute resources. Nvidia, the world’s leading designer of AI chips, is based in the United States, as are AMD and Intel, its two largest competitors.25 Last year, the United States announced restrictions on the export of cutting-edge AI chips and semiconductor manufacturing equipment to the PRC.26 Controls on the former apply only to the highest-end chips that meet thresholds for performance and interconnect speed. As a result, U.S. firms enjoy easier access to compute resources than PRC counterparts. However, well over 90 percent of the world’s AI chips are manufactured in Taiwan, leaving supply vulnerable for both countries.27 And while China is making compute resources available to academic researchers, the United States has not taken comparable measures.28

To make matters worse, booming compute demand from GenAI comes at a time when chip manufacturing is running up against the laws of physics. The world’s most advanced chips feature patterns just 3 nanometers across, or roughly 25,000 times smaller than the width of a human hair.54 As today’s chip manufacturing techniques push up against physical limits, Moore’s Law – the prediction that the amount of computing power on a chip will double every two years – is slowing down.55
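The two comparisons in this paragraph can be checked with simple arithmetic. The Python sketch below is illustrative only and rests on two assumptions that are not in the text: a human hair width of about 75 micrometers (real hair varies widely) and an 11-year window from 2012 to 2023 for the Moore's Law comparison.

```python
# Feature size quoted in the text, in nanometers.
feature_nm = 3

# Assumption: a human hair is about 75 micrometers (75,000 nm) wide.
hair_nm = 75_000

# How many 3 nm features fit across one hair width.
size_ratio = hair_nm / feature_nm

# Moore's Law as phrased in the text: computing power doubles every two years.
# Assumption: an 11-year window (2012 to 2023) for comparison.
years = 11
moore_growth = 2 ** (years / 2)

# Growth in AI training compute over the same period, as quoted earlier.
ai_compute_growth = 55_000_000

print(f"hair width / feature size: {size_ratio:,.0f}x")
print(f"Moore's Law growth over {years} years: about {moore_growth:.0f}x")
print(f"AI compute demand growth: {ai_compute_growth:,}x")
```

Even at its full historical pace, a two-year doubling would deliver growth of only a few dozen times over that window, which is why the gap has to be closed with more chips, specialized hardware, and new computing paradigms rather than transistor scaling alone.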

Several pathways exist to meet this challenge. The first is building specialized chips and systems for AI workloads. Over the past few years, for example, AI firms have moved away from using general-purpose chips to train models and instead rely on Graphics Processing Units (GPUs) that can perform many computations in parallel. Building new kinds of specialized AI accelerators, which match custom hardware to specific applications, holds tremendous promise. U.S.-based large technology firms and AI chip startups are moving to capitalize on this trend, suggesting that substantial market incentives already exist.56

New computing paradigms like quantum and neuromorphic computing are well into development worldwide to provide compute gains beyond Moore’s Law. The chip industry is already pursuing 3D heterogeneous integration – scaling Moore’s Law in the third dimension by stacking components and connecting them in new ways.57 DARPA’s Electronics Resurgence Initiative 2.0 is pursuing a robust research program in this area, with a focus on driving rapid commercialization while supporting defense mission requirements.58 But a number of other emerging paradigms exist that could help meet skyrocketing compute demand in the intermediate term: examples include silicon photonics; heterogeneous classical-quantum computing; neuromorphic and in-memory computing; and superconducting electronics.59 Today, many of these paradigms face hurdles to commercialization, making them ideal targets for government investment and other policies to de-risk private investment.60

Key Actions according to the SCSP report include:

Launch moonshot projects focused on incentivizing investments in emerging microelectronics paradigms that could power future generations of AI models. True microelectronics moonshots can occur across the U.S. innovation ecosystem, ranging from companies to DARPA’s Microsystems Technology Office. But who sets the bar for the nation, supports implementation, and reports results to the President? Short of a Technology Competitiveness Council,61 the National Semiconductor Technology Center (NSTC), already funded under the CHIPS and Science Act and scheduled to launch later this year, could be the place. To achieve genuine moonshots, a coordinating mechanism across government programs is needed.62 In addition, the NSTC Investment Fund should be rapidly stood up and sufficiently resourced with at least $1 billion in government seed funding. As part of its mission, the NSTC has an opportunity to serve as an incubator, providing a one-stop-shop “help desk” for innovators as they attempt to navigate the public-private ecosystem.

Create the National Artificial Intelligence Research Resource (NAIRR). To preserve America’s competitive edge in AI research and prevent expertise from becoming concentrated in a handful of companies, the United States must ensure academic researchers can access large amounts of compute resources via the cloud.34 The National Science Foundation (NSF) and OSTP, through their NAIRR Task Force, have released a detailed implementation plan for the NAIRR, supported by input from leading experts, academic institutions, and industry,35 and bipartisan, bicameral Members of Congress have introduced legislation to authorize the NAIRR.36 If authorized, this program should be funded as quickly as possible at $2.6 billion over six years, as requested by NSF and OSTP.37 The United States should also explore the creation of a parallel Democracies’ Artificial Intelligence Research Resource (DAIRR) in concert with key allies and partners.

Implement a know-your-customer regime for cloud providers. Recent reports suggest that sanctioned PRC AI companies are seeking to circumvent the October 7, 2022, restrictions by renting access to controlled GPUs via cloud providers.38 The Department of Commerce should begin by implementing know-your-customer requirements for U.S. cloud providers – as required by Executive Order 13984 (endnote 39) – as well as conducting diplomatic outreach with the Department of State to encourage allies and partners to implement responsible screening practices among their providers.40 These steps can be undertaken with existing resources and should be deployed as quickly as possible.

For the endnotes of this SCSP report that are referenced here, go to endnotes. For the full report, go to this link.

Computer Chip Supply Chain Vulnerabilities: Chip shortages have already disrupted various industries. The geopolitical aspect of the chip supply chain necessitates comprehensive strategic planning and risk mitigation. See: Chip Stratigame

Technology Convergence and Market Disruption: Rapid advancements in technology are changing market dynamics and user expectations. See: Disruptive and Exponential Technologies.

The New Tech Trinity: Artificial Intelligence, BioTech, and Quantum Tech will make monumental shifts in the world. This new Tech Trinity will redefine our economy, both threaten and fortify our national security, and revolutionize our intelligence community. None of us are ready for this. This convergence requires a deepened commitment to foresight, preparation, and planning on a level that is not occurring anywhere. See: The New Tech Trinity.

AI Discipline Interdependence: There are concerns about uncontrolled AI growth, with many experts calling for robust AI governance. Both positive and negative impacts of AI need assessment. See: Using AI for Competitive Advantage in Business.

Benefits of Automation and New Technology: Automation, AI, robotics, and Robotic Process Automation are improving business efficiency. New sensors, especially quantum ones, are revolutionizing sectors like healthcare and national security. Advanced WiFi, cellular, and space-based communication technologies are enhancing distributed work capabilities. See: Advanced Automation and New Technologies

Emerging NLP Approaches: While Big Data remains vital, there’s a growing need for efficient small data analysis, especially with potential chip shortages. Cost reductions in training AI models offer promising prospects for business disruptions. Breakthroughs in unsupervised learning could be especially transformative. See: What Leaders Should Know About NLP